SDS-2.2, Scalable Data Science

Archived YouTube video of this live unedited lab-lecture:

This is Raaz's update of Siva's whirlwind compression of Google's free DL course on Udacity https://www.youtube.com/watch?v=iDyeK3GvFpo for Adam Briendel's DL modules that will follow.

Deep learning: A Crash Introduction

This notebook provides an introduction to Deep Learning. It is meant to help you descend more fully into these learning resources and references:

- Udacity's course on Deep Learning https://www.udacity.com/course/deep-learning--ud730 by Google engineers Arpan Chakraborty and Vincent Vanhoucke, and their full video playlist:

- Neural networks and deep learning http://neuralnetworksanddeeplearning.com/ by Michael Nielsen

- Deep learning book http://www.deeplearningbook.org/ by Ian Goodfellow, Yoshua Bengio and Aaron Courville

- Deep learning - buzzword for Artificial Neural Networks
- What is it?
  - Supervised learning model - Classifier
  - Unsupervised model - Anomaly detection (say via autoencoders)
  - Needs lots of data
  - Online learning model - backpropagation
  - Optimization - Stochastic gradient descent
  - Regularization - L1, L2, Dropout
- Supervised
  - Fully connected network
  - Convolutional neural network - e.g. for classifying images
  - Recurrent neural networks - e.g. for use on text, speech
- Unsupervised
  - Autoencoder

A quick recap of logistic regression / linear models

(Watch now: 46 seconds, from 0:04 to 0:50):

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

Regression

y = mx + c

Another way to look at a linear model

-- Image Credit: Michael Nielsen

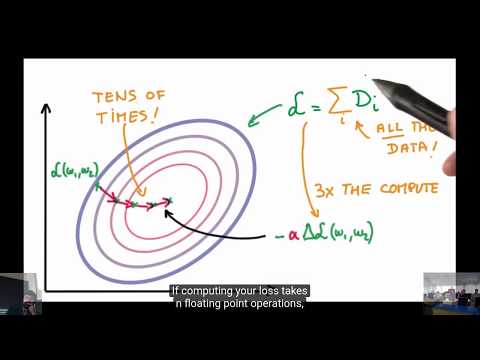

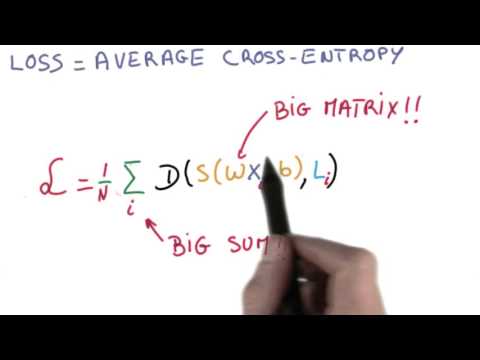
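Viewed this way, a linear model is a single unit that takes a weighted sum of its inputs plus a bias (in one dimension this is exactly y = mx + c, with m as the weight and c as the bias), and logistic regression simply passes that sum through a sigmoid. A minimal sketch in plain Python; the function names are hypothetical, chosen only for illustration.

```python
import math

def linear(x, w, b):
    """Weighted sum of inputs plus bias: w1*x1 + ... + wn*xn + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def sigmoid(z):
    """Logistic function, squashing any real z into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def logistic_regression(x, w, b):
    """Probability of the positive class under a logistic-regression model."""
    return sigmoid(linear(x, w, b))

# 1-D case with m = 2, c = 1: y = 2*3 + 1
print(linear([3.0], [2.0], 1.0))                          # 7.0
print(logistic_regression([0.0, 0.0], [1.0, -1.0], 0.0))  # 0.5
```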

Recap - Gradient descent

(1 minute 54 seconds):

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

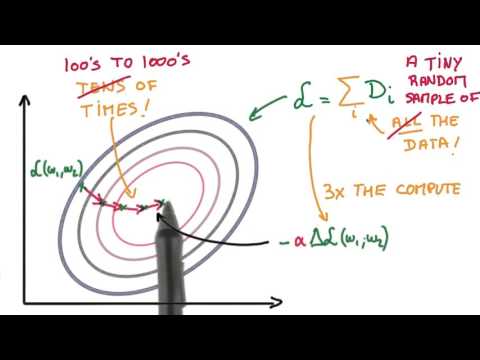
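Gradient descent repeatedly moves the parameters a small step against the gradient of the loss. A minimal sketch, assuming the model y = mx + c from above and a mean-squared-error loss computed over the whole (toy, noise-free) dataset at every step; the learning rate and step count are illustrative choices, not tuned values.

```python
def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit y = m*x + c by full-batch gradient descent on mean squared error."""
    m, c = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Residuals of the current fit on the entire dataset
        errs = [(m * x + c) - y for x, y in zip(xs, ys)]
        # Partial derivatives of L(m, c) = (1/n) * sum(err^2)
        grad_m = 2.0 / n * sum(e * x for e, x in zip(errs, xs))
        grad_c = 2.0 / n * sum(errs)
        # Step downhill, scaled by the learning rate
        m -= lr * grad_m
        c -= lr * grad_c
    return m, c

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # noise-free data generated from y = 2x + 1
m, c = gradient_descent(xs, ys)
print(round(m, 3), round(c, 3))  # 2.0 1.0
```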

Recap - Stochastic Gradient descent

(2 minutes 25 seconds):

(1 minute 28 seconds):

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

HOGWILD! Parallel SGD without locks http://i.stanford.edu/hazy/papers/hogwild-nips.pdf

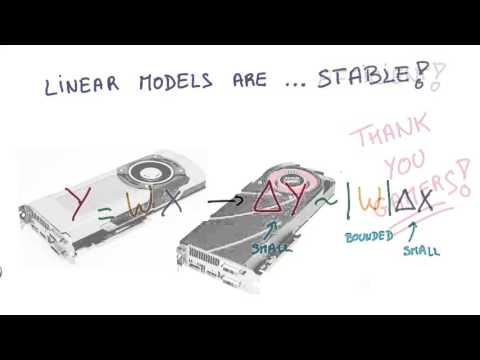
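Stochastic gradient descent replaces the full-dataset gradient with a cheap, noisy estimate from a small random mini-batch, taking many more (but much cheaper) steps. A minimal sketch on the same toy y = mx + c problem; the batch size, learning rate, epoch count and seed are illustrative assumptions. HOGWILD! goes further and lets several threads apply such updates to shared parameters without locking; that parallel part is not shown here.

```python
import random

def sgd(xs, ys, lr=0.02, epochs=3000, batch_size=2, seed=0):
    """Fit y = m*x + c with mini-batch stochastic gradient descent."""
    rng = random.Random(seed)
    m, c = 0.0, 0.0
    idx = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(idx)  # visit the data in a fresh random order each epoch
        for start in range(0, len(idx), batch_size):
            batch = idx[start:start + batch_size]
            # Gradient estimated from a small random batch, not the full dataset
            errs = [(m * xs[i] + c) - ys[i] for i in batch]
            grad_m = 2.0 / len(batch) * sum(e * xs[i] for e, i in zip(errs, batch))
            grad_c = 2.0 / len(batch) * sum(errs)
            m -= lr * grad_m
            c -= lr * grad_c
    return m, c

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
m, c = sgd(xs, ys)
print(m, c)
```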

Why deep learning? - Linear model

(24 seconds, from 0:15 to 0:39):

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

ReLU - Rectified linear unit or Rectifier - max(0, x)

-- Image Credit: Wikipedia

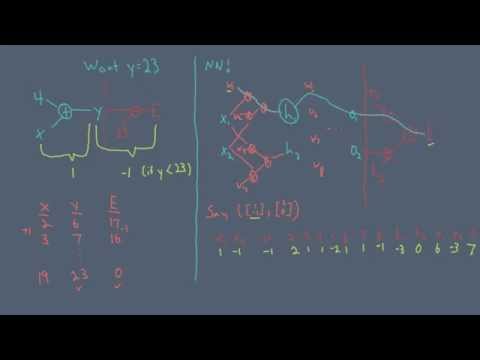

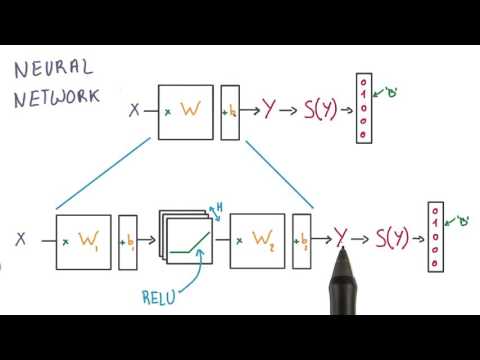
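ReLU is the simplest useful non-linearity: it passes positive inputs through unchanged and zeroes out the rest, so its derivative is just 0 or 1, which keeps backpropagation cheap. A minimal sketch; using 0 as the derivative exactly at x = 0 (where it is undefined) is a common convention, assumed here.

```python
def relu(x):
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)

def relu_grad(x):
    """Derivative of ReLU; undefined at x == 0, where 0 is used by convention."""
    return 1.0 if x > 0 else 0.0

print([relu(v) for v in (-2.0, 0.0, 3.5)])       # [0.0, 0.0, 3.5]
print([relu_grad(v) for v in (-2.0, 0.0, 3.5)])  # [0.0, 0.0, 1.0]
```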

Neural Network

Watch now (45 seconds, from 0:00 to 0:45)

***

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

***

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

Is a decision tree a linear model? http://datascience.stackexchange.com/questions/6787/is-decision-tree-algorithm-a-linear-or-nonlinear-algorithm

Neural Network

***


-- Image credit: Wikipedia

***

-- Image credit: Wikipedia
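Why the hidden layer matters: no linear model can output XOR of two binary inputs, but a network with one hidden layer of two ReLU units can. A minimal sketch with hand-picked weights (hypothetical, chosen for illustration; a real network would learn its weights by SGD and backpropagation).

```python
def relu(z):
    return max(0.0, z)

def xor_net(x1, x2):
    """One hidden layer of two ReLU units with hand-picked (not learned) weights."""
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)  # counts how many inputs are on
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)  # fires only when both inputs are on
    return 1.0 * h1 - 2.0 * h2            # linear output layer

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))  # outputs 0.0, 1.0, 1.0, 0.0
```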

Multiple hidden layers

***

-- Image credit: Michael Nielsen

***

-- Image credit: Michael Nielsen
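Stacking more hidden layers just repeats the same operation: each layer takes a weighted sum of the previous layer's outputs plus a bias, then applies the non-linearity. A minimal forward-pass sketch, assuming ReLU hidden layers and a linear output layer; the 2 -> 3 -> 2 -> 1 shape and all weights below are arbitrary, hypothetical values for illustration.

```python
def relu(z):
    return max(0.0, z)

def dense(x, W, b, activation=None):
    """Fully connected layer: out_j = act(sum_i W[j][i] * x[i] + b[j])."""
    out = [sum(wji * xi for wji, xi in zip(row, x)) + bj for row, bj in zip(W, b)]
    return [activation(o) for o in out] if activation else out

def forward(x, layers):
    """Feed x through the hidden ReLU layers, then a final linear layer."""
    for W, b in layers[:-1]:
        x = dense(x, W, b, relu)  # each hidden layer re-represents the previous one
    W, b = layers[-1]
    return dense(x, W, b)

# Hypothetical 2 -> 3 -> 2 -> 1 network with arbitrary weights
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.1, -0.2]),
    ([[1.0, 0.0, 1.0], [0.0, 1.0, -1.0]], [0.0, 0.0]),
    ([[2.0, -1.0]], [0.5]),
]
out = forward([1.0, 2.0], layers)
print(out)
```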

What does it mean to go deep? What does each of the hidden layers learn?

Watch now (1 minute 13 seconds)

***

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

***

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

Chain rule

Chain rule in neural networks

Watch later (55 seconds)

***

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke

***

-- Video Credit: Udacity's deep learning by Arpan Chakraborty and Vincent Vanhoucke
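In a network, every output is a composition of simple functions, so its derivative is the product of the derivatives along the way. A minimal sketch for one sigmoid unit, f(w) = sigmoid(w*x + b), assuming the illustrative values x = 2 and b = -1: the chain rule gives df/dw = sigmoid'(z) * x, which is checked against a finite-difference estimate.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def f(w, x=2.0, b=-1.0):
    """Composition f(w) = sigmoid(g(w)) with inner function g(w) = w*x + b."""
    return sigmoid(w * x + b)

def df_dw_chain(w, x=2.0, b=-1.0):
    """Chain rule: df/dw = sigmoid'(g) * dg/dw = s * (1 - s) * x."""
    s = sigmoid(w * x + b)
    return s * (1.0 - s) * x

def df_dw_numeric(w, eps=1e-6):
    """Central finite difference, used only as an independent check."""
    return (f(w + eps) - f(w - eps)) / (2 * eps)

w = 0.3
print(df_dw_chain(w), df_dw_numeric(w))  # the two values agree closely
```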

Backpropagation

To properly understand this you will need at least 20 minutes, depending on how rusty your maths is.

First go through this carefully: https://stats.stackexchange.com/questions/224140/step-by-step-example-of-reverse-mode-automatic-differentiation

Watch later (9 minutes 55 seconds)

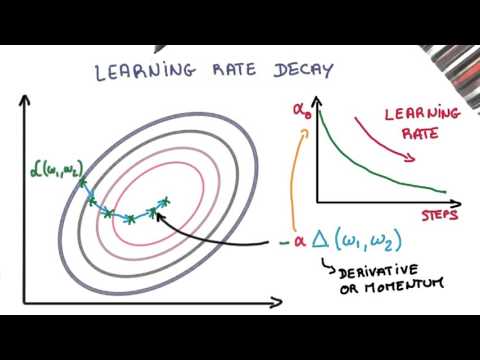
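Backpropagation is reverse-mode automatic differentiation applied to a network's loss: record how each value was computed, then walk the computation graph backwards, multiplying local derivatives (the chain rule) and summing over all paths. A minimal scalar sketch; the `Var` class and `backward` function are hypothetical names, and f(x1, x2) = x1*x2 + sin(x1) is an assumed example function, not necessarily the one used in the linked answer.

```python
import math

class Var:
    """A scalar node in a computation graph that remembers how it was computed."""
    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (parent Var, local derivative d self / d parent)
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

def sin(v):
    return Var(math.sin(v.value), ((v, math.cos(v.value)),))

def backward(output):
    """Visit nodes in reverse topological order, applying the chain rule."""
    order, seen = [], set()

    def visit(node):
        if id(node) not in seen:
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)

    visit(output)
    output.grad = 1.0
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += local * node.grad  # accumulate contributions over all paths

x1, x2 = Var(2.0), Var(5.0)
y = x1 * x2 + sin(x1)  # f(x1, x2) = x1*x2 + sin(x1)
backward(y)
print(x1.grad, x2.grad)  # df/dx1 = x2 + cos(x1), df/dx2 = x1
```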

How do you set the learning rate (the step size in SGD)?

There is a lot more, including newer frameworks that automate the tuning of these knobs using probabilistic programs (but in non-distributed settings as of Dec 2017).

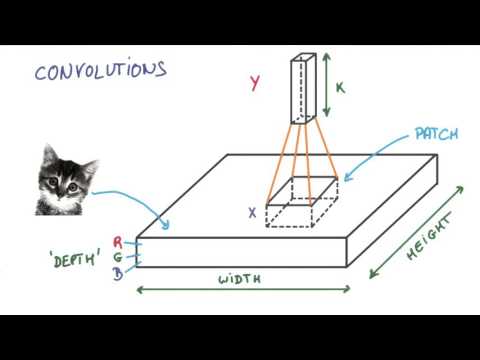

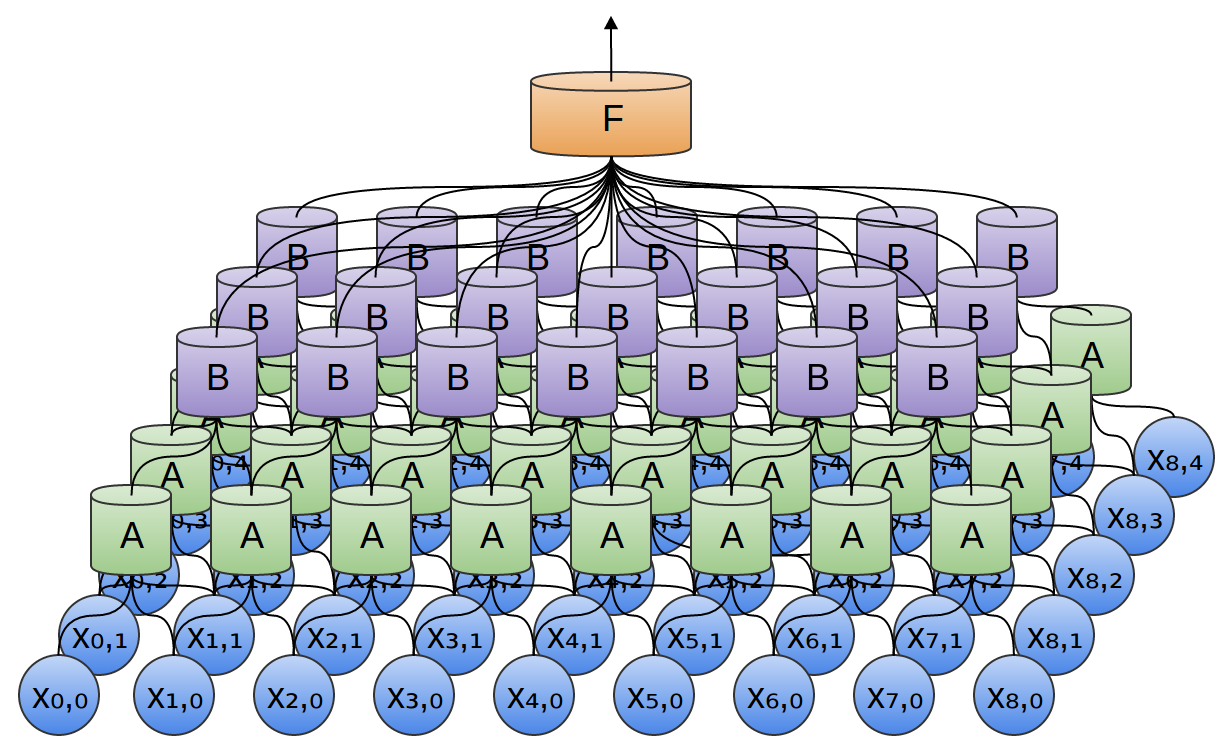
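The step size trade-off is easiest to see on a toy loss. For f(w) = w², each update is w <- w - lr * 2w = (1 - 2*lr) * w, so the iterates shrink only when |1 - 2*lr| < 1, i.e. 0 < lr < 1: too small is slow, too large overshoots and diverges. A minimal sketch under that quadratic assumption; real losses are not quadratic, but the same trade-off appears.

```python
def descend(lr, w0=1.0, steps=20):
    """Gradient descent on f(w) = w**2, whose gradient is 2*w."""
    w = w0
    for _ in range(steps):
        w -= lr * 2 * w  # each step multiplies w by (1 - 2*lr)
    return w

for lr in (0.01, 0.1, 0.45, 1.1):
    print(lr, descend(lr))  # slow, steady, very fast, diverging
```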

So far we have only seen fully connected neural networks. Now let's move on to more interesting ones that exploit the spatial locality and nearness patterns inherent in certain classes of data, such as image data.

Convolutional Neural Networks

- Alex Krizhevsky, Ilya Sutskever, Geoffrey E. Hinton - ImageNet Classification with Deep Convolutional Neural Networks: https://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf

- Convolutional Neural networks blog - http://colah.github.io/posts/2014-07-Conv-Nets-Modular/

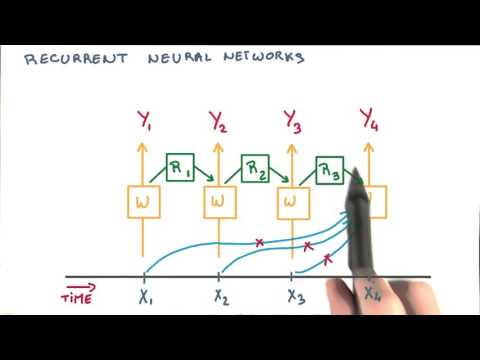
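The core operation of a CNN slides a small kernel over the image and takes a dot product at each position, so each output depends only on a local neighbourhood (locality) and the same few weights are reused everywhere (weight sharing). A minimal "valid" (no padding, stride 1) sketch; strictly speaking it computes cross-correlation, as most deep-learning libraries do, and the edge-detecting kernel is a hypothetical hand-made example, not a learned filter.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (strictly, cross-correlation) with stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    for i in range(oh):
        for j in range(ow):
            # Dot product of the kernel with the kh x kw patch at (i, j);
            # the same kernel weights are reused at every position
            out[i][j] = sum(kernel[a][b] * image[i + a][j + b]
                            for a in range(kh) for b in range(kw))
    return out

# A vertical edge: dark left half, bright right half
image = [[0, 0, 1, 1] for _ in range(4)]
edge_kernel = [[-1, 1], [-1, 1]]  # hypothetical vertical-edge detector
print(conv2d(image, edge_kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```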

Recurrent neural network

http://colah.github.io/posts/2015-08-Understanding-LSTMs/


http://karpathy.github.io/2015/05/21/rnn-effectiveness/
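A recurrent network reads a sequence one element at a time, applying the same weights at every step and carrying a hidden state forward, so earlier inputs can influence later outputs. A minimal sketch of a vanilla (Elman-style) RNN with a tanh non-linearity; the sizes and weights below are hypothetical, for illustration only.

```python
import math

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One vanilla RNN step: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    return [math.tanh(sum(w * x for w, x in zip(W_x[j], x_t))
                      + sum(u * h for u, h in zip(W_h[j], h_prev))
                      + b[j])
            for j in range(len(b))]

def run_rnn(xs, W_x, W_h, b):
    """Apply the same weights at every time step, carrying the hidden state forward."""
    h = [0.0] * len(b)
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_x, W_h, b)
        states.append(h)
    return states

# Hypothetical sizes: 1-D inputs, 2-D hidden state, arbitrary weights
W_x = [[0.5], [-0.3]]
W_h = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.1]
states = run_rnn([[1.0], [0.0], [-1.0]], W_x, W_h, b)
for h in states:
    print(h)
```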

LSTM - Long short-term memory

GRU - Gated recurrent unit

http://arxiv.org/pdf/1406.1078v3.pdf
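The paper by Cho et al. (2014) linked above introduced the gated recurrent unit. A minimal sketch of one GRU step following that paper's equations: a reset gate r decides how much of the previous state feeds the candidate state, and an update gate z interpolates between the old state and the candidate. Bias terms are omitted for brevity; the helper names and the all-zero parameters in the usage example are hypothetical. Some other write-ups swap the roles of z and 1 - z, which amounts to relabeling the gate.

```python
import math

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h_prev, p):
    """One GRU step in the form of Cho et al. (2014); biases omitted."""
    r = [sigmoid(a + c) for a, c in zip(matvec(p["W_r"], x), matvec(p["U_r"], h_prev))]
    z = [sigmoid(a + c) for a, c in zip(matvec(p["W_z"], x), matvec(p["U_z"], h_prev))]
    # Candidate state: the reset gate scales how much of the past is used
    gated = [ri * hi for ri, hi in zip(r, h_prev)]
    h_tilde = [math.tanh(a + c) for a, c in zip(matvec(p["W"], x), matvec(p["U"], gated))]
    # Update gate: z keeps the old state, (1 - z) takes the candidate
    return [zi * hi + (1.0 - zi) * ht for zi, hi, ht in zip(z, h_prev, h_tilde)]

def zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]

# Hypothetical sizes: 1-D input, 2-D hidden state, all weights zero.
# Then r = z = 0.5 and h_tilde = 0, so the new state is half the old one.
params = {"W_r": zeros(2, 1), "W_z": zeros(2, 1), "W": zeros(2, 1),
          "U_r": zeros(2, 2), "U_z": zeros(2, 2), "U": zeros(2, 2)}
print(gru_step([1.0], [0.4, -0.2], params))  # [0.2, -0.1]
```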


Autoencoder
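An autoencoder squeezes its input through a narrow bottleneck and is trained to reconstruct it, so inputs unlike the training data tend to reconstruct poorly; that reconstruction error is what makes autoencoders usable for anomaly detection. A minimal sketch of a 2 -> 1 -> 2 linear autoencoder with tied weights; the weights are fixed by hand along the line y = x (a hypothetical stand-in for weights that would normally be learned by minimizing reconstruction error), and the three points are made-up examples.

```python
import math

def encode(x, w):
    """Compress a 2-D point to a single number (the bottleneck)."""
    return w[0] * x[0] + w[1] * x[1]

def decode(code, w):
    """Map the 1-D code back to 2-D; tied weights reuse the encoder vector."""
    return [code * w[0], code * w[1]]

def reconstruction_error(x, w):
    r = decode(encode(x, w), w)
    return (x[0] - r[0]) ** 2 + (x[1] - r[1]) ** 2

# Hypothetical hand-set weights: unit vector along y = x (not trained here)
w = [1 / math.sqrt(2), 1 / math.sqrt(2)]
for point in ([1.0, 1.1], [2.0, 1.9], [2.0, -2.0]):
    print(point, round(reconstruction_error(point, w), 3))  # the last point stands out
```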

A more recent improvement over CNNs is Hinton's capsule networks. Check them out here if you want to prepare for a future interview question in 2017/2018 or so: