SDS-2.2, Scalable Data Science

Archived YouTube video of this live unedited lab-lecture:

Topic Modeling of Movie Dialogs with Latent Dirichlet Allocation

Let us cluster the conversations from different movies!

This notebook will provide a brief algorithm summary, links for further reading, and an example of how to use LDA for Topic Modeling.

Note: this notebook has not yet been tested in Spark 2.2 (see notebook 034 for syntactic issues, if any).

Algorithm Summary

- Task: Identify topics from a collection of text documents

- Input: Vectors of word counts

- Optimizers:

- EMLDAOptimizer using Expectation Maximization

- OnlineLDAOptimizer using Iterative Mini-Batch Sampling for Online Variational Bayes
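To make the "vectors of word counts" input concrete, here is a minimal plain-Scala sketch (no Spark needed) that turns two toy documents into count vectors over a shared vocabulary. The documents, the whitespace tokenizer, and the names `docs`, `tokenize`, `vocabulary`, and `toCountVector` are illustrative assumptions, not part of this notebook's pipeline, which builds its vectors in the later workflow steps.

```scala
// Toy corpus: each document is a short string (made-up examples).
val docs: Seq[String] = Seq(
  "the ship sails the sea",
  "the crew loves the ship"
)

// Naive tokenizer: lowercase and split on whitespace.
def tokenize(text: String): Seq[String] =
  text.toLowerCase.split("\\s+").filter(_.nonEmpty).toSeq

// Shared vocabulary: every distinct token, sorted so that indices are stable.
val vocabulary: Seq[String] = docs.flatMap(tokenize).distinct.sorted

// One count per vocabulary entry; this is the per-document input LDA works on.
def toCountVector(text: String): Seq[Int] = {
  val counts = tokenize(text).groupBy(identity).map { case (w, ws) => w -> ws.size }
  vocabulary.map(w => counts.getOrElse(w, 0))
}

val countVectors: Seq[Seq[Int]] = docs.map(toCountVector)
```

Each position in a count vector corresponds to one vocabulary word, so word order inside a document plays no role.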

Links

- Spark API docs

- MLlib Programming Guide

- ML Feature Extractors & Transformers

- Wikipedia: Latent Dirichlet Allocation

Readings for LDA

- A high-level introduction to the topic from Communications of the ACM

- A very good high-level humanities introduction to the topic (recommended by Chris Thomson in English Department at UC, Ilam):

Also read the methodological and more formal papers cited in the above links if you want to know more.

Let's get a bird's eye view of LDA from https://www.cs.princeton.edu/~blei/papers/Blei2012.pdf next.

- See pictures (hopefully you read the paper last night!)

- Algorithm of the generative model (this is unsupervised clustering)

- For a careful introduction to the topic see Section 27.3 and 27.4 (pages 950-970) of Murphy's Machine Learning: A Probabilistic Perspective, MIT Press, 2012.

- We will be quite application focussed or applied here!
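As a compact reference for the generative model mentioned above, the standard LDA generative process and its joint distribution can be written as follows. The notation (K topics, D documents, N_d words in document d, Dirichlet hyperparameters η and α) follows common usage and is an assumption here, so check it against the paper's own symbols before relying on it.

```latex
\begin{align*}
\beta_k &\sim \mathrm{Dirichlet}(\eta), & k &= 1,\dots,K \\
\theta_d &\sim \mathrm{Dirichlet}(\alpha), & d &= 1,\dots,D \\
z_{d,n} \mid \theta_d &\sim \mathrm{Categorical}(\theta_d), & n &= 1,\dots,N_d \\
w_{d,n} \mid z_{d,n}, \beta_{1:K} &\sim \mathrm{Categorical}(\beta_{z_{d,n}}), & n &= 1,\dots,N_d
\end{align*}

\[
p(\beta_{1:K}, \theta_{1:D}, z_{1:D}, w_{1:D})
  = \prod_{k=1}^{K} p(\beta_k)
    \prod_{d=1}^{D} p(\theta_d)
    \prod_{n=1}^{N_d} p(z_{d,n} \mid \theta_d)\, p(w_{d,n} \mid \beta_{1:K}, z_{d,n})
\]
```

Here β_k is the word distribution of topic k, θ_d the topic proportions of document d, z_{d,n} the topic assignment of the n-th word in document d, and w_{d,n} the observed word. Only the words are observed; inference recovers the rest.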

Probabilistic Topic Modeling Example

This is an outline of our Topic Modeling workflow. Feel free to jump to any subtopic to find out more.

- Step 0. Dataset Review

- Step 1. Downloading and Loading Data into DBFS

- (Step 1. only needs to be done once per shard - see details at the end of the notebook for Step 1.)

- Step 2. Loading the Data and Data Cleaning

- Step 3. Text Tokenization

- Step 4. Remove Stopwords

- Step 5. Vector of Token Counts

- Step 6. Create LDA model with Online Variational Bayes

- Step 7. Review Topics

- Step 8. Model Tuning - Refilter Stopwords

- Step 9. Create LDA model with Expectation Maximization

- Step 10. Visualize Results

Step 0. Dataset Review

In this example, we will use the Cornell Movie Dialogs Corpus.

Here is the README.txt:

Cornell Movie-Dialogs Corpus

Distributed together with:

"Chameleons in imagined conversations: A new approach to understanding coordination of linguistic style in dialogs" Cristian Danescu-Niculescu-Mizil and Lillian Lee Proceedings of the Workshop on Cognitive Modeling and Computational Linguistics, ACL 2011.

(this paper is included in this zip file)

NOTE: If you have results to report on these corpora, please send email to [email protected] or [email protected] so we can add you to our list of people using this data. Thanks!

Contents of this README:

A) Brief description

B) Files description

C) Details on the collection procedure

D) Contact

A) Brief description:

This corpus contains a metadata-rich collection of fictional conversations extracted from raw movie scripts:

- 220,579 conversational exchanges between 10,292 pairs of movie characters

- involves 9,035 characters from 617 movies

- in total 304,713 utterances

- movie metadata included:

- genres

- release year

- IMDB rating

- number of IMDB votes


- character metadata included:

- gender (for 3,774 characters)

- position on movie credits (3,321 characters)

B) Files description:

In all files the field separator is " +++$+++ "

movie_titles_metadata.txt

- contains information about each movie title

- fields:

- movieID,

- movie title,

- movie year,

- IMDB rating,

- no. IMDB votes,

- genres in the format ['genre1','genre2',...,'genreN']

movie_characters_metadata.txt

- contains information about each movie character

- fields:

- characterID

- character name

- movieID

- movie title

- gender ("?" for unlabeled cases)

- position in credits ("?" for unlabeled cases)

movie_lines.txt

- contains the actual text of each utterance

- fields:

- lineID

- characterID (who uttered this phrase)

- movieID

- character name

- text of the utterance

movie_conversations.txt

- the structure of the conversations

- fields

- characterID of the first character involved in the conversation

- characterID of the second character involved in the conversation

- movieID of the movie in which the conversation occurred

- list of the utterances that make the conversation, in chronological order: ['lineID1','lineID2',...,'lineIDN'] has to be matched with movie_lines.txt to reconstruct the actual content

raw_script_urls.txt

- the urls from which the raw sources were retrieved

C) Details on the collection procedure:

We started from raw publicly available movie scripts (sources acknowledged in raw_script_urls.txt). In order to collect the metadata necessary for this study and to distinguish between two script versions of the same movie, we automatically matched each script with an entry in movie database provided by IMDB (The Internet Movie Database; data interfaces available at http://www.imdb.com/interfaces). Some amount of manual correction was also involved. When more than one movie with the same title was found in IMDB, the match was made with the most popular title (the one that received most IMDB votes).

After discarding all movies that could not be matched or that had less than 5 IMDB votes, we were left with 617 unique titles with metadata including genre, release year, IMDB rating and no. of IMDB votes and cast distribution. We then identified the pairs of characters that interact and separated their conversations automatically using simple data processing heuristics. After discarding all pairs that exchanged less than 5 conversational exchanges there were 10,292 left, exchanging 220,579 conversational exchanges (304,713 utterances). After automatically matching the names of the 9,035 involved characters to the list of cast distribution, we used the gender of each interpreting actor to infer the fictional gender of a subset of 3,321 movie characters (we raised the number of gendered 3,774 characters through manual annotation). Similarly, we collected the end credit position of a subset of 3,321 characters as a proxy for their status.

D) Contact:

Please email any questions to: [email protected] (Cristian Danescu-Niculescu-Mizil)

Step 2. Loading the Data and Data Cleaning

We have already used the wget command to download the file and put it in our distributed file system (this process takes about 1 minute). To repeat these steps, or to download data from another source, follow the instructions under Step 1. Downloading and Loading Data into DBFS at the bottom of this worksheet.

Let's make sure these files are in dbfs now:

// this is where the data resides in dbfs (see below to download it first, if you go to a new shard!)

display(dbutils.fs.ls("dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/"))

| path | name | size |

|---|---|---|

| dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/README.txt | README.txt | 4181.0 |

| dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/movie_characters_metadata.txt | movie_characters_metadata.txt | 705695.0 |

| dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/movie_conversations.txt | movie_conversations.txt | 6760930.0 |

| dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/movie_lines.txt | movie_lines.txt | 3.4641919e7 |

| dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/movie_titles_metadata.txt | movie_titles_metadata.txt | 67289.0 |

| dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/raw_script_urls.txt | raw_script_urls.txt | 56177.0 |

Conversations Data

// Load the text file and pair each line with its index in the file

val conversationsRaw = sc.textFile("dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/movie_conversations.txt").zipWithIndex()

conversationsRaw: org.apache.spark.rdd.RDD[(String, Long)] = ZippedWithIndexRDD[50] at zipWithIndex at <console>:33

Review 5 lines to get a sense of the data format.

conversationsRaw.top(5).foreach(println) // top(5) returns the five largest elements under the implicit ordering, not the first five lines

(u999 +++$+++ u1006 +++$+++ m65 +++$+++ ['L227588', 'L227589', 'L227590', 'L227591', 'L227592', 'L227593', 'L227594', 'L227595', 'L227596'],8954)

(u998 +++$+++ u1005 +++$+++ m65 +++$+++ ['L228159', 'L228160'],8952)

(u998 +++$+++ u1005 +++$+++ m65 +++$+++ ['L228157', 'L228158'],8951)

(u998 +++$+++ u1005 +++$+++ m65 +++$+++ ['L228130', 'L228131'],8950)

(u998 +++$+++ u1005 +++$+++ m65 +++$+++ ['L228127', 'L228128', 'L228129'],8949)
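Note that `top(n)` on an RDD returns the n largest elements under the implicit ordering, largest first, which is why the tuples above begin with `u999` rather than with the start of the file; `take(n)` is the call that returns the first n elements. A plain-Scala sketch of the difference, using a small made-up list in place of the RDD (the names `indexed`, `firstTwo`, and `largestTwo` are illustrative):

```scala
// Stand-in for an RDD[(String, Long)] produced by zipWithIndex (made-up values).
val indexed: Seq[(String, Long)] = Seq(
  ("u0 ...", 0L),
  ("u998 ...", 1L),
  ("u999 ...", 2L),
  ("u10 ...", 3L)
)

// Analogue of rdd.take(2): the first two elements in their original order.
val firstTwo: Seq[(String, Long)] = indexed.take(2)

// Analogue of rdd.top(2): the two largest elements under the default tuple
// ordering (compare the String first, then the Long), largest first.
val largestTwo: Seq[(String, Long)] =
  indexed.sorted(Ordering[(String, Long)].reverse).take(2)
```

Because the strings are compared lexicographically, `u999` sorts above `u10`, so `top` is a poor way to peek at the head of a file.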

conversationsRaw.count // there are over 83,000 conversations in total

res0: Long = 83097

import scala.util.{Failure, Success}

val regexConversation = """\s*(\w+)\s+(\+{3}\$\+{3})\s*(\w+)\s+(\2)\s*(\w+)\s+(\2)\s*(\[.*\]\s*$)""".r

case class conversationLine(a: String, b: String, c: String, d: String)

val conversationsRaw = sc.textFile("dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/movie_conversations.txt")

.zipWithIndex()

.map(x =>

{

val id:Long = x._2

val line = x._1

val pLine = regexConversation.findFirstMatchIn(line)

.map(m => conversationLine(m.group(1), m.group(3), m.group(5), m.group(7)))

match {

case Some(l) => Success(l)

case None => Failure(new Exception(s"Non matching input: $line"))

}

(id,pLine)

}

)

import scala.util.{Failure, Success}

regexConversation: scala.util.matching.Regex = \s*(\w+)\s+(\+{3}\$\+{3})\s*(\w+)\s+(\2)\s*(\w+)\s+(\2)\s*(\[.*\]\s*$)

defined class conversationLine

conversationsRaw: org.apache.spark.rdd.RDD[(Long, Product with Serializable with scala.util.Try[conversationLine])] = MapPartitionsRDD[57] at map at <console>:40

conversationsRaw.filter(x => x._2.isSuccess).count()

res1: Long = 83097

conversationsRaw.filter(x => x._2.isFailure).count()

res66: Long = 0
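The parse-then-wrap pattern used above can be tried on a single line without a cluster. The sketch below reuses the notebook's regular expression verbatim; the names `ParsedConversation` and `parseConversation` and the malformed sample line are hypothetical, and the explicit `Try` return type is a small tidy-up so that the inferred type is not `Product with Serializable with Try[...]`.

```scala
import scala.util.{Failure, Success, Try}

// Same pattern as regexConversation: three \w+ fields and a bracketed list,
// with the separator captured once (group 2) and reused via the backreference \2.
val conversationPattern =
  """\s*(\w+)\s+(\+{3}\$\+{3})\s*(\w+)\s+(\2)\s*(\w+)\s+(\2)\s*(\[.*\]\s*$)""".r

case class ParsedConversation(
  firstCharacterID: String,
  secondCharacterID: String,
  movieID: String,
  lineIDs: String
)

// Success for a line that matches, Failure (carrying the offending line) otherwise.
def parseConversation(line: String): Try[ParsedConversation] =
  conversationPattern.findFirstMatchIn(line) match {
    case Some(m) =>
      Success(ParsedConversation(m.group(1), m.group(3), m.group(5), m.group(7)))
    case None =>
      Failure(new IllegalArgumentException(s"Non matching input: $line"))
  }

// A well-formed line in the corpus format, and a made-up malformed one.
val goodLine: Try[ParsedConversation] =
  parseConversation("u0 +++$+++ u2 +++$+++ m0 +++$+++ ['L194', 'L195', 'L196', 'L197']")
val badLine: Try[ParsedConversation] =
  parseConversation("u0 | u2 | m0 | no separator here")
```

Keeping failures as values rather than throwing lets the success and failure counts be compared afterwards, as the two `filter(...).count()` cells do.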

The conversation number and line numbers of each conversation are in one line in conversationsRaw.

conversationsRaw.filter(x => x._2.isSuccess).take(5).foreach(println)

(0,Success(conversationLine(u0,u2,m0,['L194', 'L195', 'L196', 'L197'])))

(1,Success(conversationLine(u0,u2,m0,['L198', 'L199'])))

(2,Success(conversationLine(u0,u2,m0,['L200', 'L201', 'L202', 'L203'])))

(3,Success(conversationLine(u0,u2,m0,['L204', 'L205', 'L206'])))

(4,Success(conversationLine(u0,u2,m0,['L207', 'L208'])))

Let's create a conversations DataFrame that holds just the conversation ID, the position of each line within its conversation (to keep the order information), and the line ID.

val conversations

= conversationsRaw

.filter(x => x._2.isSuccess)

.flatMap {

case (id,Success(l))

=> { val conv = l.d.replace("[","").replace("]","").replace("'","").replace(" ","")

val convLinesIndexed = conv.split(",").zipWithIndex

convLinesIndexed.map( cLI => (id, cLI._2, cLI._1))

}

}.toDF("conversationID","intraConversationID","lineID")

conversations: org.apache.spark.sql.DataFrame = [conversationID: bigint, intraConversationID: int, lineID: string]

conversations.show(15)

| conversationID | intraConversationID | lineID |
|---|---|---|
| 0 | 0 | L194 |
| 0 | 1 | L195 |
| 0 | 2 | L196 |
| 0 | 3 | L197 |
| 1 | 0 | L198 |
| 1 | 1 | L199 |
| 2 | 0 | L200 |
| 2 | 1 | L201 |
| 2 | 2 | L202 |
| 2 | 3 | L203 |
| 3 | 0 | L204 |
| 3 | 1 | L205 |
| 3 | 2 | L206 |
| 4 | 0 | L207 |
| 4 | 1 | L208 |

only showing top 15 rows
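The same explode step can be sketched on plain Scala collections. Instead of the chain of `replace` calls, this variant pulls the IDs out with a regular expression; that is a swapped-in technique, and the `L\d+` pattern assumes every line ID is an `L` followed by digits, as in the output above. The name `explodeConversation` is hypothetical.

```scala
// Matches one line ID such as L194 (assumed shape: 'L' followed by digits).
val lineIdPattern = """L\d+""".r

// Turn (conversationID, "['L198', 'L199']") into
// (conversationID, intraConversationID, lineID) triples, preserving order.
def explodeConversation(conversationID: Long, rawList: String): Seq[(Long, Int, String)] =
  lineIdPattern.findAllIn(rawList).toList.zipWithIndex.map {
    case (lineID, position) => (conversationID, position, lineID)
  }

val exploded: Seq[(Long, Int, String)] =
  explodeConversation(1L, "['L198', 'L199']")
```

The position index is what preserves the chronological order of utterances once the rows are shuffled by later joins.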

Movie Titles

val moviesMetaDataRaw = sc.textFile("dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/movie_titles_metadata.txt")

moviesMetaDataRaw.top(5).foreach(println)

m99 +++$+++ indiana jones and the temple of doom +++$+++ 1984 +++$+++ 7.50 +++$+++ 112054 +++$+++ ['action', 'adventure']

m98 +++$+++ indiana jones and the last crusade +++$+++ 1989 +++$+++ 8.30 +++$+++ 174947 +++$+++ ['action', 'adventure', 'thriller', 'action', 'adventure', 'fantasy']

m97 +++$+++ independence day +++$+++ 1996 +++$+++ 6.60 +++$+++ 151698 +++$+++ ['action', 'adventure', 'sci-fi', 'thriller']

m96 +++$+++ invaders from mars +++$+++ 1953 +++$+++ 6.40 +++$+++ 2115 +++$+++ ['horror', 'sci-fi']

m95 +++$+++ i am legend +++$+++ 2007 +++$+++ 7.10 +++$+++ 156084 +++$+++ ['drama', 'sci-fi', 'thriller']

moviesMetaDataRaw: org.apache.spark.rdd.RDD[String] = dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/movie_titles_metadata.txt MapPartitionsRDD[73] at textFile at <console>:33
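The raw lines above use the corpus-wide separator ` +++$+++ `. Because it contains the regex metacharacters `+` and `$`, splitting on it with `String.split` needs quoting. A minimal plain-Scala sketch of that simpler alternative to a full regular expression, using `java.util.regex.Pattern.quote`; the helper name `splitFields` is hypothetical and the sample line is the `m95` line from the output above.

```scala
import java.util.regex.Pattern

// The corpus-wide field separator, including its surrounding spaces.
val fieldSeparator: String = " +++$+++ "

// Quote the separator so that '+' and '$' are matched literally;
// limit -1 keeps trailing empty fields instead of dropping them.
def splitFields(line: String): Seq[String] =
  line.split(Pattern.quote(fieldSeparator), -1).map(_.trim).toSeq

val sampleTitleLine: String =
  "m95 +++$+++ i am legend +++$+++ 2007 +++$+++ 7.10 +++$+++ 156084 +++$+++ ['drama', 'sci-fi', 'thriller']"

val sampleFields: Seq[String] = splitFields(sampleTitleLine)
```

Checking that `sampleFields` has exactly six entries is a cheap way to flag malformed lines before building a case class from them.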

moviesMetaDataRaw.count() // number of movies

res4: Long = 617

import scala.util.{Failure, Success}

/* - contains information about each movie title

- fields:

- movieID,

- movie title,

- movie year,

- IMDB rating,

- no. IMDB votes,

- genres in the format ['genre1','genre2',...,'genreN']

*/

val regexMovieMetaData = """\s*(\w+)\s+(\+{3}\$\+{3})\s*(.+)\s+(\2)\s+(.+)\s+(\2)\s+(.+)\s+(\2)\s+(.+)\s+(\2)\s+(\[.*\]\s*$)""".r

case class lineInMovieMetaData(movieID: String, movieTitle: String, movieYear: String, IMDBRating: String, NumIMDBVotes: String, genres: String)

val moviesMetaDataRaw = sc.textFile("dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/movie_titles_metadata.txt")

.map(line =>

{

val pLine = regexMovieMetaData.findFirstMatchIn(line)

.map(m => lineInMovieMetaData(m.group(1), m.group(3), m.group(5), m.group(7), m.group(9), m.group(11)))

match {

case Some(l) => Success(l)

case None => Failure(new Exception(s"Non matching input: $line"))

}

pLine

}

)

import scala.util.{Failure, Success}

regexMovieMetaData: scala.util.matching.Regex = \s*(\w+)\s+(\+{3}\$\+{3})\s*(.+)\s+(\2)\s+(.+)\s+(\2)\s+(.+)\s+(\2)\s+(.+)\s+(\2)\s+(\[.*\]\s*$)

defined class lineInMovieMetaData

moviesMetaDataRaw: org.apache.spark.rdd.RDD[Product with Serializable with scala.util.Try[lineInMovieMetaData]] = MapPartitionsRDD[79] at map at <console>:49

moviesMetaDataRaw.count

res5: Long = 617

moviesMetaDataRaw.filter(x => x.isSuccess).count()

res6: Long = 617

moviesMetaDataRaw.filter(x => x.isSuccess).take(10).foreach(println)

Success(lineInMovieMetaData(m0,10 things i hate about you,1999,6.90,62847,['comedy', 'romance']))

Success(lineInMovieMetaData(m1,1492: conquest of paradise,1992,6.20,10421,['adventure', 'biography', 'drama', 'history']))

Success(lineInMovieMetaData(m2,15 minutes,2001,6.10,25854,['action', 'crime', 'drama', 'thriller']))

Success(lineInMovieMetaData(m3,2001: a space odyssey,1968,8.40,163227,['adventure', 'mystery', 'sci-fi']))

Success(lineInMovieMetaData(m4,48 hrs.,1982,6.90,22289,['action', 'comedy', 'crime', 'drama', 'thriller']))

Success(lineInMovieMetaData(m5,the fifth element,1997,7.50,133756,['action', 'adventure', 'romance', 'sci-fi', 'thriller']))

Success(lineInMovieMetaData(m6,8mm,1999,6.30,48212,['crime', 'mystery', 'thriller']))

Success(lineInMovieMetaData(m7,a nightmare on elm street 4: the dream master,1988,5.20,13590,['fantasy', 'horror', 'thriller']))

Success(lineInMovieMetaData(m8,a nightmare on elm street: the dream child,1989,4.70,11092,['fantasy', 'horror', 'thriller']))

Success(lineInMovieMetaData(m9,the atomic submarine,1959,4.90,513,['sci-fi', 'thriller']))

//moviesMetaDataRaw.filter(x => x.isFailure).take(10).foreach(println) // to regex refine for casting

val moviesMetaData

= moviesMetaDataRaw

.filter(x => x.isSuccess)

.map { case Success(l) => l }

.toDF().select("movieID","movieTitle","movieYear")

moviesMetaData: org.apache.spark.sql.DataFrame = [movieID: string, movieTitle: string, movieYear: string]

moviesMetaData.show(10,false)

| movieID | movieTitle | movieYear |
|---|---|---|
| m0 | 10 things i hate about you | 1999 |
| m1 | 1492: conquest of paradise | 1992 |
| m2 | 15 minutes | 2001 |
| m3 | 2001: a space odyssey | 1968 |
| m4 | 48 hrs. | 1982 |
| m5 | the fifth element | 1997 |
| m6 | 8mm | 1999 |
| m7 | a nightmare on elm street 4: the dream master | 1988 |
| m8 | a nightmare on elm street: the dream child | 1989 |
| m9 | the atomic submarine | 1959 |

only showing top 10 rows
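All parsed metadata fields, including the year and the genre list, are still strings at this point. If typed values are wanted later, one cautious option is to convert each field with `Try` so that an unexpected value becomes `None` instead of failing the job. A minimal plain-Scala sketch; the helper names `toIntSafe` and `parseGenres` are hypothetical, and the malformed year `"1999x"` is a made-up value used only to exercise the fallback.

```scala
import scala.util.Try

// Convert a string to Int, yielding None for anything that is not a clean integer.
def toIntSafe(raw: String): Option[Int] = Try(raw.trim.toInt).toOption

// Turn "['comedy', 'romance']" into Seq("comedy", "romance").
def parseGenres(raw: String): Seq[String] =
  raw.trim.stripPrefix("[").stripSuffix("]")
    .split(",")
    .map(_.trim.stripPrefix("'").stripSuffix("'"))
    .filter(_.nonEmpty)
    .toSeq

val cleanYear: Option[Int] = toIntSafe("1999")
val oddYear: Option[Int] = toIntSafe("1999x")
val genres: Seq[String] = parseGenres("['comedy', 'romance']")
val noGenres: Seq[String] = parseGenres("[]")
```

Counting how many rows come back as `None` shows whether a column can be cast safely or needs a refined pattern first.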

Lines Data

val linesRaw = sc.textFile("dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/movie_lines.txt")

linesRaw: org.apache.spark.rdd.RDD[String] = dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/movie_lines.txt MapPartitionsRDD[94] at textFile at <console>:35

linesRaw.count() // number of lines making up the conversations

res9: Long = 304713

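Review 5 lines to get a sense of the data format. Note that top(5) returns the five largest lines under string ordering, not the first five lines of the file.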

linesRaw.top(5).foreach(println)

L99999 +++$+++ u4166 +++$+++ m278 +++$+++ DULANEY +++$+++ You didn't know about it before that?

L99998 +++$+++ u4168 +++$+++ m278 +++$+++ JOANNE +++$+++ To show you this. It's a letter from that lawyer, Koehler. He wrote it to me the day after I saw him. He's the one who told me I could get the money if Miss Lawson went to jail.

L99997 +++$+++ u4166 +++$+++ m278 +++$+++ DULANEY +++$+++ Why'd you come here?

L99996 +++$+++ u4168 +++$+++ m278 +++$+++ JOANNE +++$+++ I'm gonna go to jail. I know they're gonna make it look like I did it. They gotta put it on someone.

L99995 +++$+++ u4168 +++$+++ m278 +++$+++ JOANNE +++$+++ What do you think I've got? A gun? Maybe I'm gonna kill you too. Maybe I'll blow your head off right now.

To see 5 random lines from movie_lines.txt, evaluate the following cell.

linesRaw.takeSample(false, 5).foreach(println)

L144853 +++$+++ u4635 +++$+++ m306 +++$+++ QUINN +++$+++ M.J., I'm going to have to borrow Ruben. The alien-smuggling thing in Chinatown is going down tomorrow night and Jack's kid got hit by a car. I gotta give Ruben to Nikko.

L597838 +++$+++ u8315 +++$+++ m565 +++$+++ AULON +++$+++ Yes, my lord.

L107915 +++$+++ u613 +++$+++ m39 +++$+++ BOURNE +++$+++ -- it's always bad and it's never anything but bits and pieces anyway! You ever think that maybe it's just making it worse? You don't wonder that?

L65662 +++$+++ u3864 +++$+++ m256 +++$+++ AUDREY +++$+++ ...Who is this?

L124159 +++$+++ u4395 +++$+++ m291 +++$+++ MALE VOICE +++$+++ She's yours. What are we waiting on?

import scala.util.{Failure, Success}

/* fields in movie_lines.txt are:

- lineID

- characterID (who uttered this phrase)

- movieID

- character name

- text of the utterance

*/

val regexLine = """\s*(\w+)\s+(\+{3}\$\+{3})\s*(\w+)\s+(\2)\s*(\w+)\s+(\2)\s*(.+)\s+(\2)\s*(.*$)""".r

case class lineInMovie(lineID: String, characterID: String, movieID: String, characterName: String, text: String)

val linesRaw = sc.textFile("dbfs:/datasets/sds/nlp/cornell_movie_dialogs_corpus/movie_lines.txt")

.map(line =>

{

val pLine = regexLine.findFirstMatchIn(line)

.map(m => lineInMovie(m.group(1), m.group(3), m.group(5), m.group(7), m.group(9)))

match {

case Some(l) => Success(l)

case None => Failure(new Exception(s"Non matching input: $line"))

}

pLine

}

)

import scala.util.{Failure, Success}

regexLine: scala.util.matching.Regex = \s*(\w+)\s+(\+{3}\$\+{3})\s*(\w+)\s+(\2)\s*(\w+)\s+(\2)\s*(.+)\s+(\2)\s*(.*$)

defined class lineInMovie

linesRaw: org.apache.spark.rdd.RDD[Product with Serializable with scala.util.Try[lineInMovie]] = MapPartitionsRDD[101] at map at <console>:49

linesRaw.filter(x => x.isSuccess).count()

res11: Long = 304713

linesRaw.filter(x => x.isFailure).count()

res12: Long = 0

linesRaw.filter(x => x.isSuccess).take(5).foreach(println)

Success(lineInMovie(L1045,u0,m0,BIANCA,They do not!))

Success(lineInMovie(L1044,u2,m0,CAMERON,They do to!))

Success(lineInMovie(L985,u0,m0,BIANCA,I hope so.))

Success(lineInMovie(L984,u2,m0,CAMERON,She okay?))

Success(lineInMovie(L925,u0,m0,BIANCA,Let's go.))

Let's make a DataFrame out of the successfully parsed lines.

val lines

= linesRaw

.filter(x => x.isSuccess)

.map { case Success(l) => l }

.toDF()

.join(moviesMetaData, "movieID") // and join it to get movie meta data

lines: org.apache.spark.sql.DataFrame = [movieID: string, lineID: string, characterID: string, characterName: string, text: string, movieTitle: string, movieYear: string]

lines.show(5)

| movieID | lineID | characterID | characterName | text | movieTitle | movieYear |
|---|---|---|---|---|---|---|
| m124 | L357776 | u1889 | ASSISTANT SECRETARY | Let me have it. ... | lost horizon | 1937 |
| m124 | L357775 | u1892 | CLERK | Conway's gone aga... | lost horizon | 1937 |
| m124 | L357774 | u1889 | ASSISTANT SECRETARY | I'll dispatch a c... | lost horizon | 1937 |
| m124 | L357773 | u1892 | CLERK | Yes, sir. | lost horizon | 1937 |
| m124 | L357772 | u1889 | ASSISTANT SECRETARY | Yes. Might as wel... | lost horizon | 1937 |

only showing top 5 rows

Dialogs with Lines

Let's join the two DataFrames on lineID next.

val convLines = conversations.join(lines, "lineID").sort($"conversationID", $"intraConversationID")

convLines: org.apache.spark.sql.DataFrame = [lineID: string, conversationID: bigint, intraConversationID: int, movieID: string, characterID: string, characterName: string, text: string, movieTitle: string, movieYear: string]

convLines.count

res15: Long = 304713

conversations.count

res16: Long = 304713

display(convLines)

| lineID | conversationID | intraConversationID | movieID | characterID | characterName | text | movieTitle | movieYear |

|---|---|---|---|---|---|---|---|---|

| L194 | 0.0 | 0.0 | m0 | u0 | BIANCA | Can we make this quick? Roxanne Korrine and Andrew Barrett are having an incredibly horrendous public break- up on the quad. Again. | 10 things i hate about you | 1999 |

| L195 | 0.0 | 1.0 | m0 | u2 | CAMERON | Well, I thought we'd start with pronunciation, if that's okay with you. | 10 things i hate about you | 1999 |

| L196 | 0.0 | 2.0 | m0 | u0 | BIANCA | Not the hacking and gagging and spitting part. Please. | 10 things i hate about you | 1999 |

| L197 | 0.0 | 3.0 | m0 | u2 | CAMERON | Okay... then how 'bout we try out some French cuisine. Saturday? Night? | 10 things i hate about you | 1999 |

| L198 | 1.0 | 0.0 | m0 | u0 | BIANCA | You're asking me out. That's so cute. What's your name again? | 10 things i hate about you | 1999 |

| L199 | 1.0 | 1.0 | m0 | u2 | CAMERON | Forget it. | 10 things i hate about you | 1999 |

| L200 | 2.0 | 0.0 | m0 | u0 | BIANCA | No, no, it's my fault -- we didn't have a proper introduction --- | 10 things i hate about you | 1999 |

| L201 | 2.0 | 1.0 | m0 | u2 | CAMERON | Cameron. | 10 things i hate about you | 1999 |

| L202 | 2.0 | 2.0 | m0 | u0 | BIANCA | The thing is, Cameron -- I'm at the mercy of a particularly hideous breed of loser. My sister. I can't date until she does. | 10 things i hate about you | 1999 |

| L203 | 2.0 | 3.0 | m0 | u2 | CAMERON | Seems like she could get a date easy enough... | 10 things i hate about you | 1999 |

| L204 | 3.0 | 0.0 | m0 | u2 | CAMERON | Why? | 10 things i hate about you | 1999 |

| L205 | 3.0 | 1.0 | m0 | u0 | BIANCA | Unsolved mystery. She used to be really popular when she started high school, then it was just like she got sick of it or something. | 10 things i hate about you | 1999 |

| L206 | 3.0 | 2.0 | m0 | u2 | CAMERON | That's a shame. | 10 things i hate about you | 1999 |

| L207 | 4.0 | 0.0 | m0 | u0 | BIANCA | Gosh, if only we could find Kat a boyfriend... | 10 things i hate about you | 1999 |

| L208 | 4.0 | 1.0 | m0 | u2 | CAMERON | Let me see what I can do. | 10 things i hate about you | 1999 |

| L271 | 5.0 | 0.0 | m0 | u0 | BIANCA | C'esc ma tete. This is my head | 10 things i hate about you | 1999 |

| L272 | 5.0 | 1.0 | m0 | u2 | CAMERON | Right. See? You're ready for the quiz. | 10 things i hate about you | 1999 |

| L273 | 5.0 | 2.0 | m0 | u0 | BIANCA | I don't want to know how to say that though. I want to know useful things. Like where the good stores are. How much does champagne cost? Stuff like Chat. I have never in my life had to point out my head to someone. | 10 things i hate about you | 1999 |

| L274 | 5.0 | 3.0 | m0 | u2 | CAMERON | That's because it's such a nice one. | 10 things i hate about you | 1999 |

| L275 | 5.0 | 4.0 | m0 | u0 | BIANCA | Forget French. | 10 things i hate about you | 1999 |

| L276 | 6.0 | 0.0 | m0 | u0 | BIANCA | How is our little Find the Wench A Date plan progressing? | 10 things i hate about you | 1999 |

| L277 | 6.0 | 1.0 | m0 | u2 | CAMERON | Well, there's someone I think might be -- | 10 things i hate about you | 1999 |

| L280 | 7.0 | 0.0 | m0 | u2 | CAMERON | There. | 10 things i hate about you | 1999 |

| L281 | 7.0 | 1.0 | m0 | u0 | BIANCA | Where? | 10 things i hate about you | 1999 |

| L363 | 8.0 | 0.0 | m0 | u2 | CAMERON | You got something on your mind? | 10 things i hate about you | 1999 |

| L364 | 8.0 | 1.0 | m0 | u0 | BIANCA | I counted on you to help my cause. You and that thug are obviously failing. Aren't we ever going on our date? | 10 things i hate about you | 1999 |

| L365 | 9.0 | 0.0 | m0 | u2 | CAMERON | You have my word. As a gentleman | 10 things i hate about you | 1999 |

| L366 | 9.0 | 1.0 | m0 | u0 | BIANCA | You're sweet. | 10 things i hate about you | 1999 |

| L367 | 10.0 | 0.0 | m0 | u2 | CAMERON | How do you get your hair to look like that? | 10 things i hate about you | 1999 |

| L368 | 10.0 | 1.0 | m0 | u0 | BIANCA | Eber's Deep Conditioner every two days. And I never, ever use a blowdryer without the diffuser attachment. | 10 things i hate about you | 1999 |

Truncated to 30 rows

Let's amalgamate the texts uttered in the same conversation.

By doing this we lose all the information in the order of utterance.

But this is fine, as we are going to do LDA with just the first-order information of the words uttered in each conversation by anyone involved in the dialogue.
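To see why dropping utterance order does not matter for a bag-of-words model, here is a plain-Scala sketch that mirrors the grouping step on a made-up list of `(conversationID, text)` pairs and checks that the word counts are the same whichever order the utterances are concatenated in. The names `utterances`, `amalgamate`, and `wordCounts` are illustrative; the separator string is the one passed to `concat_ws` in the next cell.

```scala
// Made-up utterances keyed by conversation ID.
val utterances: Seq[(Long, String)] = Seq(
  (0L, "can we make this quick"),
  (0L, "well we can start"),
  (1L, "forget it")
)

val separator: String = " :-()-: "

// Concatenate all utterances of each conversation into one document.
def amalgamate(rows: Seq[(Long, String)]): Map[Long, String] =
  rows.groupBy(_._1).map { case (id, group) => id -> group.map(_._2).mkString(separator) }

// Bag of words: token -> count, ignoring the separator token itself.
def wordCounts(document: String): Map[String, Int] =
  document.split("\\s+")
    .filter(token => token.nonEmpty && token != separator.trim)
    .groupBy(identity)
    .map { case (word, occurrences) => word -> occurrences.length }

val inOrder: Map[Long, String] = amalgamate(utterances)
val reversed: Map[Long, String] = amalgamate(utterances.reverse)
```

The two concatenated strings for conversation 0 differ, but their word counts are identical. This matters in Spark as well: as far as I know, the order of elements returned by `collect_list` after a `groupBy` is not guaranteed once rows have been shuffled, so the sort applied to `convLines` may not survive the aggregation.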

import org.apache.spark.sql.functions.{collect_list, udf, lit, concat_ws}

val corpusDF = convLines.groupBy($"conversationID",$"movieID")

.agg(concat_ws(" :-()-: ",collect_list($"text")).alias("corpus"))

.join(moviesMetaData, "movieID") // and join it to get movie meta data

.select($"conversationID".as("id"),$"corpus",$"movieTitle",$"movieYear")

.cache()

import org.apache.spark.sql.functions.{collect_list, udf, lit, concat_ws}

corpusDF: org.apache.spark.sql.DataFrame = [id: bigint, corpus: string, movieTitle: string, movieYear: string]

corpusDF.count()

res18: Long = 83097

corpusDF.take(5).foreach(println)

[17668,This would be funny - if it wasn't so pathetic. Why, she isn't a day over twenty! :-()-: You're wrong, George. :-()-: I'm not wrong. She told me so. Besides, she wouldn't have to tell me. I'd know anyway. I found out a lot of things last night. I'm not ashamed of it either. It's probably one of the few decent things that's ever happened in this hellish place.,lost horizon,1937]

[17598,Cave, eh? Where? :-()-: Over by that hill.,lost horizon,1937]

[17663,Something grand and beautiful, George. Something I've been searching for all my life. The answer to the confusion and bewilderment of a lifetime. I've found it, George, and I can't leave it. You mustn't either. :-()-: I don't know what you're talking about. You're carrying around a secret that seems to be eating you up. If you'll only tell me about it. :-()-: I will, George. I want to tell you. I'll burst with it if I don't. It's weird and fantastical and sometimes unbelievable, but so beautiful! Well, as you know, we were kidnapped and brought here . . .,lost horizon,1937]

[17593,You see? You get the idea? From this reservoir here I can pipe in the whole works. Oh, I'm going to get a great kick out of this. Of course it's just to keep my hand in, but with the equipment we have here, I can put a plumbing system in for the whole village down there. Can rig it up in no time. Do you realize those poor people are still going to the well for water? :-()-: It's unbelievable. :-()-: Think of it! In times like these. :-()-: Say, what about that gold deal? :-()-: Huh? :-()-: Gold. You were going to� :-()-: Oh - that! That can wait. Nobody's going to run off with it. Say, I've got to get busy. I want to show this whole layout to Chang. So long. Don't you take any wooden nickels. :-()-: All right.,lost horizon,1937]

[17658,Let me up! Let me up! :-()-: All right. Sorry, George.,lost horizon,1937]

display(corpusDF)

| id | corpus | movieTitle | movieYear |

|---|---|---|---|

| 17668.0 | This would be funny - if it wasn't so pathetic. Why, she isn't a day over twenty! :-()-: You're wrong, George. :-()-: I'm not wrong. She told me so. Besides, she wouldn't have to tell me. I'd know anyway. I found out a lot of things last night. I'm not ashamed of it either. It's probably one of the few decent things that's ever happened in this hellish place. | lost horizon | 1937 |

| 17598.0 | Cave, eh? Where? :-()-: Over by that hill. | lost horizon | 1937 |

| 17663.0 | Something grand and beautiful, George. Something I've been searching for all my life. The answer to the confusion and bewilderment of a lifetime. I've found it, George, and I can't leave it. You mustn't either. :-()-: I don't know what you're talking about. You're carrying around a secret that seems to be eating you up. If you'll only tell me about it. :-()-: I will, George. I want to tell you. I'll burst with it if I don't. It's weird and fantastical and sometimes unbelievable, but so beautiful! Well, as you know, we were kidnapped and brought here . . . | lost horizon | 1937 |

| 17593.0 | You see? You get the idea? From this reservoir here I can pipe in the whole works. Oh, I'm going to get a great kick out of this. Of course it's just to keep my hand in, but with the equipment we have here, I can put a plumbing system in for the whole village down there. Can rig it up in no time. Do you realize those poor people are still going to the well for water? :-()-: It's unbelievable. :-()-: Think of it! In times like these. :-()-: Say, what about that gold deal? :-()-: Huh? :-()-: Gold. You were going to� :-()-: Oh - that! That can wait. Nobody's going to run off with it. Say, I've got to get busy. I want to show this whole layout to Chang. So long. Don't you take any wooden nickels. :-()-: All right. | lost horizon | 1937 |

| 17658.0 | Let me up! Let me up! :-()-: All right. Sorry, George. | lost horizon | 1937 |

| 17610.0 | That would suit me perfectly. I'm always broke. How did you pay for them? :-()-: Our Valley is very rich in a metal called gold, which fortunately for us is valued very highly in the outside world. So we merely . . . :-()-: �buy and sell? :-()-: Buy and - sell? No, no, pardon me, exchange . :-()-: I see. Gold for ideas. You know Mr. Chang, there's something so simple and naive about all of this that I suspect there has been a shrewd, guiding intelligence somewhere. Whose idea was it? How did it all start? :-()-: That, my dear Conway, is the story of a remarkable man. :-()-: Who? :-()-: A Belgian priest by the name of Father Perrault, the first European to find this place, and a very great man indeed. He is responsible for everything you see here. He built Shangri-La, taught our natives, and began our collection of art. In fact, Shangri-La is Father Perrault. :-()-: When was all this? :-()-: Oh, let me see - way back in 1713, I think it was, that Father Perrault stumbled into the Valley, half frozen to death. It was typical of the man that, one leg being frozen, and of course there being no doctors here, he amputated the leg himself. :-()-: He amputated his own leg? :-()-: Yes. Oddly enough, later, when he had learned to understand their language, the natives told him he could have saved his leg. It would have healed without amputation. :-()-: Well, they didn't actually mean that. :-()-: Yes, yes. They were very sincere about it too. You see, a perfect body in perfect health is the rule here. They've never known anything different. So what was true for them they thought would naturally be true for anyone else living here. :-()-: Well, is it? :-()-: Rather astonishingly so, yes. And particularly so in the case of Father Perrault himself. Do you know when he and the natives were finished building Shangri-La, he was 108 years old and still very active, in spite of only having one leg? :-()-: 108 and still active? :-()-: You're startled? :-()-: Oh, no. Just a little bowled over, that's all. :-()-: Forgive me. I should have told you it is quite common here to live to a very ripe old age. Climate, diet, mountain water, you might say. But we like to believe it is the absence of struggle in the way we live. In your countries, on the other hand, how often do you hear the expression, "He worried himself to death?" or, "This thing or that killed him?" :-()-: Very often. :-()-: And very true. Your lives are therefore, as a rule, shorter, not so much by natural death as by indirect suicide. :-()-: That's all very fine if it works out. A little amazing, of course. :-()-: Why, Mr. Conway, you surprise me! :-()-: I surprise you? Now that's news. :-()-: I mean, your amazement. I could have understood it in any of your companions, but you - who have dreamed and written so much about better worlds. Or is it that you fail to recognize one of your own dreams when you see it? :-()-: Mr. Chang, if you don't mind, I think I'll go on being amazed - in moderation, of course. :-()-: Then everything is quite all right, isn't it? | lost horizon | 1937 |

| 17648.0 | What are these people? :-()-: I don't know. I can't get the dialect. | lost horizon | 1937 |

| 17562.0 | I didn't care for 'sister' last night, and I don't like 'Lovey' this morning. My name is Lovett - Alexander, P. :-()-: I see. :-()-: I see. :-()-: Well, it's a good morning, anyway. :-()-: I'm never conversational before I coffee. | lost horizon | 1937 |

| 17681.0 | Huh? I give it up. But this not knowing where you're going is exciting anyway. :-()-: Well, Mr. Conway, for a man who is supposed to be a leader, your do- nothing attitude is very disappointing. | lost horizon | 1937 |

| 17573.0 | How about you Lovey? Come on. Let's you and I play a game of honeymoon bridge. :-()-: I'm thinking. :-()-: Thinking? What about some double solitaire? :-()-: As a matter of fact, I'm very good at double solitaire. :-()-: No kidding? :-()-: Yes. :-()-: Then I'm your man. Come on, Toots. | lost horizon | 1937 |

| 17638.0 | The power house - they've blown it up! The planes can't land without lights. :-()-: Come on! We'll burn the hangar. That will make light for them! | lost horizon | 1937 |

| 17622.0 | In that event, we better make arrangements to get some porters immediately. Some means to get us back to civilization. :-()-: Are you so certain you are away from it? :-()-: As far away as I ever want to be. :-()-: Oh, dear. | lost horizon | 1937 |

| 17660.0 | For heaven's sake, Bob, what's the matter with you? You went out there for the purpose of� :-()-: George. George - do you mind? I'm sorry, but I can't talk about it tonight. | lost horizon | 1937 |

| 17633.0 | That Conway seemed to belong here. In fact, it was suggested that someone be sent to bring him here. :-()-: That I be brought here? Who had that brilliant idea? :-()-: Sondra Bizet. :-()-: Oh, the girl at the piano? :-()-: Yes. She has read your books and has a profound admiration for you, as have we all. :-()-: Of course I have suspected that our being here is no accident. Furthermore, I have a feeling that we're never supposed to leave. But that, for the moment, doesn't concern me greatly. I'll meet that when it comes. What particularly interests me at present is, why was I brought here? What possible use can I be to an already thriving community? :-()-: We need men like you here, to be sure that our community will continue to thrive. In return for which, Shangri-La has much to give you. You are still, by the world's standards, a youngish man. Yet in the normal course of existence, you can expect twenty or thirty years of gradually diminishing activity. Here, however, in Shangri- La, by our standards your life has just begun, and may go on and on. :-()-: But to be candid, Father, a prolonged future doesn't excite me. It would have to have a point. I've sometimes doubted whether life itself has any. And if that is so, then long life must be even more pointless. No, I'd need a much more definite reason for going on and on. :-()-: We have reason. It is the entire meaning and purpose of Shangri-La. It came to me in a vision, long, long, ago. I saw all the nations strengthening, not in wisdom, but in the vulgar passions and the will to destroy. I saw their machine power multiply until a single weaponed man might match a whole army. I foresaw a time when man, exulting in the technique of murder, would rage so hotly over the world that every book, every treasure, would be doomed to destruction. 
This vision was so vivid and so moving that I determined to gather together all the things of beauty and culture that I could and preserve them here against the doom toward which the world is rushing. Look at the world today! Is there anything more pitiful? What madness there is, what blindness, what unintelligent leadership! A scurrying mass of bewildered humanity crashing headlong against each other, propelled by an orgy of greed and brutality. The time must come, my friend, when this orgy will spend itself, when brutality and the lust for power must perish by its own sword. Against that time is why I avoided death and am here, and why you were brought here. For when that day comes, the world must begin to look for a new life. And it is our hope that they may find it here. For here we shall be with their books and their music and a way of life based on one simple rule: Be Kind. When that day comes, it is our hope that the brotherly love of Shangri-La will spread throughout the world. Yes, my son, when the strong have devoured each other, the Christian ethic may at last be fulfilled, and the meek shall inherit the earth. | lost horizon | 1937 |

| 17628.0 | Are you taking me? :-()-: Yes, of course. Certainly. Come on! | lost horizon | 1937 |

| 17601.0 | And mine's Conway. :-()-: How do you do? :-()-: You've no idea, sir, how unexpected and very welcome you are. My friends and I - and the lady in the plane - left Baskul night before last for Shanghai, but we suddenly found ourselves traveling in the opposite direction� | lost horizon | 1937 |

| 17650.0 | What is it? Has he fainted? :-()-: It looks like it. Smell those fumes? | lost horizon | 1937 |

| 17634.0 | Yes, of course, your brother is a problem. It was to be expected. :-()-: I knew you'd understand. That's why I came to you for help. :-()-: You must not look to me for help. Your brother is no longer my problem. He is now your problem, Conway. :-()-: Mine? :-()-: Because, my son, I am placing in your hands the future and destiny of Shangri-La. For I am going to die. | lost horizon | 1937 |

| 17580.0 | Hey Lovey, come here! Lovey, I asked for a glass of wine and look what I got. Come on, sit down. :-()-: So that's where you are. I might of known it. No wonder you couldn't hear me. :-()-: You were asked to have a glass of wine. Sit down! :-()-: And be poisoned out here in the open? :-()-: Certainly not! | lost horizon | 1937 |

| 17699.0 | Why, he's speaking English. :-()-: English! | lost horizon | 1937 |

| 17645.0 | Don't worry, George. Nothing's going to happen. I'll fall right into line. I'll be the good little boy that everybody wants me to be. I'll be the best little Foreign Secretary we ever had, just because I haven't the nerve to be anything else. :-()-: Do try to sleep, Bob. :-()-: Huh? Oh, sure, Freshie. Good thing, sleep. | lost horizon | 1937 |

| 17564.0 | He might have lost his way. :-()-: Of course. That's what I told them last night. You can't expect a man to sail around in the dark.[5] During this George has been looking around - he rises. | lost horizon | 1937 |

| 17570.0 | Yeah? If this be execution, lead me to it. :-()-: That's what they do with cattle just before the slaughter. Fatten them. :-()-: Uh-huh. You're a scream, Lovey. :-()-: Please don't call me Lovey. | lost horizon | 1937 |

| 17646.0 | Oh, stop it! :-()-: The bloke up there looks a Chinese, or a Mongolian, or something. | lost horizon | 1937 |

| 17587.0 | It's better than freezing to death down below, isn't it? :-()-: I'll say. | lost horizon | 1937 |

| 17652.0 | What is it? :-()-: See that spot? :-()-: Yes. :-()-: That's where we were this morning. He had it marked. Right on the border of Tibet. Here's where civilization ends. We must be a thousand miles beyond it - just a blank on the map. :-()-: What's it mean? :-()-: It means we're in unexplored country - country nobody ever reached. | lost horizon | 1937 |

| 17582.0 | �then the bears came right into the bedroom and the little baby bear said, "Oh, somebody's been sleeping in my bed." And then the mama bear said, "Oh dear, somebody's been sleeping in my bed!" And then the big papa bear, he roared, "And somebody's been sleeping in my bed!" Well, you have to admit the poor little bears were in a quandary! :-()-: I'm going to sleep in my bed. Come on, Lovey! :-()-: They were in a quandary, and� :-()-: Come on, Lovey. :-()-: Why? Why 'come on' all the time? What's the matter? Are you going to be a fuss budget all your life? Here, drink it up! Aren't you having any fun? Where was I? :-()-: In a quandary. | lost horizon | 1937 |

| 17647.0 | George, what are you going to do? :-()-: I'm going to drag him out and force him to tell us what his game is. | lost horizon | 1937 |

| 17577.0 | Yes. :-()-: Sounds like a stall to me. | lost horizon | 1937 |

| 17642.0 | Just what I needed too. :-()-: You? :-()-: Just this once, Bob. I feel like celebrating. Just think of it, Bob - a cruiser sent to Shanghai just to take you back to England. You know what it means. Here you are. Don't bother about those cables now. I want you to drink with me. Gentlemen, I give you Robert Conway - England's new Foreign Secretary. | lost horizon | 1937 |

Truncated to 30 rows

Feature extraction and transformation APIs

We will use the convenient Feature extraction and transformation APIs.

Step 3. Text Tokenization

We will use the RegexTokenizer to split each document into tokens. We can call setMinTokenLength() to set a minimum token length; tokens shorter than the minimum are filtered out. See the ML Feature Extractors & Transformers guide linked above.

import org.apache.spark.ml.feature.RegexTokenizer

// Set params for RegexTokenizer

val tokenizer = new RegexTokenizer()

.setPattern("[\\W_]+") // split on runs of non-word characters and underscores (punctuation as well as white space)

.setMinTokenLength(4) // Filter away tokens with length < 4

.setInputCol("corpus") // name of the input column

.setOutputCol("tokens") // name of the output column

// Tokenize document

val tokenized_df = tokenizer.transform(corpusDF)

import org.apache.spark.ml.feature.RegexTokenizer

tokenizer: org.apache.spark.ml.feature.RegexTokenizer = regexTok_610667693de3

tokenized_df: org.apache.spark.sql.DataFrame = [id: bigint, corpus: string, movieTitle: string, movieYear: string, tokens: array<string>]

display(tokenized_df.sample(false,0.001,1234L))

display(tokenized_df.sample(false,0.001,1234L).select("tokens"))
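To see what the tokenizer settings above do to a single line of dialog, here is a minimal plain-Scala sketch that needs no Spark. The helper `tokenize` is hypothetical and written only for illustration; it assumes the RegexTokenizer defaults of lowercasing the text and treating the pattern as a delimiter.

```scala
// Plain-Scala sketch of the tokenization rule (no Spark required).
// `tokenize` is a hypothetical helper, not part of the Spark API.
def tokenize(text: String, minTokenLength: Int = 4): Seq[String] =
  text.toLowerCase                      // assumed default: tokens are lowercased
    .split("[\\W_]+")                   // split on runs of non-word characters and underscores
    .filter(_.length >= minTokenLength) // drop tokens shorter than the minimum
    .toSeq

// One conversation taken from the sample rows shown earlier.
val sampleTokens = tokenize("What are these people? :-()-: I don't know. I can't get the dialect.")
println(sampleTokens.mkString(", ")) // prints: what, these, people, know, dialect
```

Note how the apostrophe splits "don't" into "don" and "t", both of which fall below the minimum length. This is why fragments such as "didn" and "doesn" show up in the vocabulary later on.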

Step 4. Remove Stopwords

We can easily remove stopwords using the StopWordsRemover. See the ML Feature Extractors & Transformers guide linked above.

If a list of stopwords is not provided, the StopWordsRemover falls back to a default list of stopwords, shown below.

are,around,as,at,back,be,became,because,become,becomes,becoming,been,before,beforehand,behind,being,below,beside,besides,between,beyond,bill,both,bottom,but,by,call,can,cannot,cant,co,computer,con,could,

couldnt,cry,de,describe,detail,do,done,down,due,during,each,eg,eight,either,eleven,else,elsewhere,empty,enough,etc,even,ever,every,everyone,everything,everywhere,except,few,fifteen,fify,fill,find,fire,first,

five,for,former,formerly,forty,found,four,from,front,full,further,get,give,go,had,has,hasnt,have,he,hence,her,here,hereafter,hereby,herein,hereupon,hers,herself,him,himself,his,how,however,hundred,i,ie,if,

in,inc,indeed,interest,into,is,it,its,itself,keep,last,latter,latterly,least,less,ltd,made,many,may,me,meanwhile,might,mill,mine,more,moreover,most,mostly,move,much,must,my,myself,name,namely,neither,never,

nevertheless,next,nine,no,nobody,none,noone,nor,not,nothing,now,nowhere,of,off,often,on,once,one,only,onto,or,other,others,otherwise,our,ours,ourselves,out,over,own,part,per,perhaps,please,put,rather,re,same,

see,seem,seemed,seeming,seems,serious,several,she,should,show,side,since,sincere,six,sixty,so,some,somehow,someone,something,sometime,sometimes,somewhere,still,such,system,take,ten,than,that,the,their,them,

themselves,then,thence,there,thereafter,thereby,therefore,therein,thereupon,these,they,thick,thin,third,this,those,though,three,through,throughout,thru,thus,to,together,too,top,toward,towards,twelve,twenty,two,

un,under,until,up,upon,us,very,via,was,we,well,were,what,whatever,when,whence,whenever,where,whereafter,whereas,whereby,wherein,whereupon,wherever,whether,which,while,whither,who,whoever,whole,whom,whose,why,will,

with,within,without,would,yet,you,your,yours,yourself,yourselves

You can use getStopWords() to see the list of stopwords that will be used.

In this example, we will specify a list of stopwords for the StopWordsRemover() to use. We do this so that we can add on to the list later on.

display(dbutils.fs.ls("dbfs:/tmp/stopwords")) // check if the file already exists from earlier wget and dbfs-load

| path | name | size |

|---|---|---|

| dbfs:/tmp/stopwords | stopwords | 2237.0 |

If the file dbfs:/tmp/stopwords already exists then skip the next two cells, otherwise download and load it into DBFS by uncommenting and evaluating the next two cells.

//%sh wget http://ir.dcs.gla.ac.uk/resources/linguistic_utils/stop_words -O /tmp/stopwords # uncomment '//' at the beginning and repeat only if needed again

--2016-04-07 00:21:20-- http://ir.dcs.gla.ac.uk/resources/linguistic_utils/stop_words

Resolving ir.dcs.gla.ac.uk (ir.dcs.gla.ac.uk)... 130.209.240.253

Connecting to ir.dcs.gla.ac.uk (ir.dcs.gla.ac.uk)|130.209.240.253|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2237 (2.2K) [text/plain]

Saving to: '/tmp/stopwords'

0K .. 100% 310M=0s

2016-04-07 00:21:21 (310 MB/s) - '/tmp/stopwords' saved [2237/2237]

//%fs cp file:/tmp/stopwords dbfs:/tmp/stopwords # uncomment '//' at the beginning and repeat only if needed again

res31: Boolean = true

// List of stopwords

val stopwords = sc.textFile("/tmp/stopwords").collect()

stopwords: Array[String] = Array(a, about, above, across, after, afterwards, again, against, all, almost, alone, along, already, also, although, always, am, among, amongst, amoungst, amount, an, and, another, any, anyhow, anyone, anything, anyway, anywhere, are, around, as, at, back, be, became, because, become, becomes, becoming, been, before, beforehand, behind, being, below, beside, besides, between, beyond, bill, both, bottom, but, by, call, can, cannot, cant, co, computer, con, could, couldnt, cry, de, describe, detail, do, done, down, due, during, each, eg, eight, either, eleven, else, elsewhere, empty, enough, etc, even, ever, every, everyone, everything, everywhere, except, few, fifteen, fify, fill, find, fire, first, five, for, former, formerly, forty, found, four, from, front, full, further, get, give, go, had, has, hasnt, have, he, hence, her, here, hereafter, hereby, herein, hereupon, hers, herself, him, himself, his, how, however, hundred, i, ie, if, in, inc, indeed, interest, into, is, it, its, itself, keep, last, latter, latterly, least, less, ltd, made, many, may, me, meanwhile, might, mill, mine, more, moreover, most, mostly, move, much, must, my, myself, name, namely, neither, never, nevertheless, next, nine, no, nobody, none, noone, nor, not, nothing, now, nowhere, of, off, often, on, once, one, only, onto, or, other, others, otherwise, our, ours, ourselves, out, over, own, part, per, perhaps, please, put, rather, re, same, see, seem, seemed, seeming, seems, serious, several, she, should, show, side, since, sincere, six, sixty, so, some, somehow, someone, something, sometime, sometimes, somewhere, still, such, system, take, ten, than, that, the, their, them, themselves, then, thence, there, thereafter, thereby, therefore, therein, thereupon, these, they, thick, thin, third, this, those, though, three, through, throughout, thru, thus, to, together, too, top, toward, towards, twelve, twenty, two, un, under, until, up, upon, us, very, via, was, we, 
well, were, what, whatever, when, whence, whenever, where, whereafter, whereas, whereby, wherein, whereupon, wherever, whether, which, while, whither, who, whoever, whole, whom, whose, why, will, with, within, without, would, yet, you, your, yours, yourself, yourselves)

stopwords.length // find the number of stopwords in the scala Array[String]

res23: Int = 319

Finally, we can just remove the stopwords using the StopWordsRemover as follows:

import org.apache.spark.ml.feature.StopWordsRemover

// Set params for StopWordsRemover

val remover = new StopWordsRemover()

.setStopWords(stopwords) // This parameter is optional

.setInputCol("tokens")

.setOutputCol("filtered")

// Create new DF with Stopwords removed

val filtered_df = remover.transform(tokenized_df)

import org.apache.spark.ml.feature.StopWordsRemover

remover: org.apache.spark.ml.feature.StopWordsRemover = stopWords_bcdc59bf2ac8

filtered_df: org.apache.spark.sql.DataFrame = [id: bigint, corpus: string, movieTitle: string, movieYear: string, tokens: array<string>, filtered: array<string>]
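The filtering performed in this step can be sketched in plain Scala as follows. The helper `removeStopwords` and the small `sampleStopwords` set are hypothetical, introduced only for illustration; the sketch assumes the remover's default case-insensitive matching.

```scala
// Plain-Scala sketch of stopword removal (no Spark required).
// `removeStopwords` is a hypothetical helper, not part of the Spark API.
def removeStopwords(tokens: Seq[String], stopwords: Set[String]): Seq[String] =
  tokens.filterNot(t => stopwords.contains(t.toLowerCase)) // case-insensitive match

// A handful of entries copied from the stopword list above.
val sampleStopwords = Set("what", "these", "they", "here", "very")

val sampleFiltered =
  removeStopwords(Seq("what", "these", "people", "know", "dialect"), sampleStopwords)
println(sampleFiltered.mkString(", ")) // prints: people, know, dialect
```

A Set gives constant-time membership checks, which matters once the list grows with corpus-specific additions.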

Step 5. Vector of Token Counts

LDA takes in a vector of token counts as input. We can use the CountVectorizer() to easily convert our text documents into vectors of token counts.

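For each document, the fitted CountVectorizer model returns a sparse vector of the form (VocabSize, Array(Indexed Tokens), Array(Token Frequency)): the vocabulary size, the indices of the terms present in the document, and their counts.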

Two handy parameters to note:

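- setMinDF: Specifies the minimum number of different documents a term must appear in to be included in the vocabulary.

- setMinTF: Specifies the minimum number of times a term must appear in a document to be counted in that document's vector. It is applied when transforming and does not affect which terms enter the vocabulary.

See the ML Feature Extractors & Transformers guide linked above.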

import org.apache.spark.ml.feature.CountVectorizer

// Set params for CountVectorizer

val vectorizer = new CountVectorizer()

.setInputCol("filtered")

.setOutputCol("features")

.setVocabSize(10000)

.setMinDF(5) // the minimum number of different documents a term must appear in to be included in the vocabulary.

.fit(filtered_df)

import org.apache.spark.ml.feature.CountVectorizer

vectorizer: org.apache.spark.ml.feature.CountVectorizerModel = cntVec_daa8106c510f

// Create vector of token counts

val countVectors = vectorizer.transform(filtered_df).select("id", "features")

countVectors: org.apache.spark.sql.DataFrame = [id: bigint, features: vector]

// see the first countVectors

countVectors.take(1)

res24: Array[org.apache.spark.sql.Row] = Array([17668,(10000,[0,9,32,33,37,45,56,63,71,79,124,235,293,1562,1660,1899],[1.0,1.0,1.0,2.0,1.0,1.0,1.0,2.0,1.0,1.0,1.0,1.0,1.0,1.0,1.0,1.0])])
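To make the (VocabSize, indices, counts) layout of the output above concrete, here is a minimal plain-Scala sketch of count vectorization on a toy corpus. The helpers `buildVocabulary` and `countVector` are hypothetical and written for illustration only. The sketch ignores vocabSize and minTF, and breaking ties alphabetically is an assumption of the sketch.

```scala
// Plain-Scala sketch of count vectorization (no Spark required).
// Both helpers are hypothetical illustrations, not the Spark implementation.

// Vocabulary: terms that occur in at least minDF documents, ordered by
// descending corpus-wide count (ties broken alphabetically in this sketch).
def buildVocabulary(docs: Seq[Seq[String]], minDF: Int): IndexedSeq[String] = {
  val docFreq  = docs.flatMap(_.distinct).groupBy(identity).map { case (t, xs) => t -> xs.size }
  val termFreq = docs.flatten.groupBy(identity).map { case (t, xs) => t -> xs.size }
  termFreq.keys.filter(t => docFreq(t) >= minDF).toIndexedSeq.sortBy(t => (-termFreq(t), t))
}

// Sparse count vector as (vocabSize, sorted term indices, counts).
def countVector(doc: Seq[String], vocab: IndexedSeq[String]): (Int, Seq[Int], Seq[Double]) = {
  val index  = vocab.zipWithIndex.toMap
  val counts = doc.flatMap(t => index.get(t).toList) // skip out-of-vocabulary terms
    .groupBy(identity).map { case (i, xs) => i -> xs.size.toDouble }
  val sorted = counts.toSeq.sortBy(_._1)
  (vocab.size, sorted.map(_._1), sorted.map(_._2))
}

val toyDocs = Seq(
  Seq("know", "know", "just"),
  Seq("know", "just", "dialect"),
  Seq("know", "people")
)
val toyVocab  = buildVocabulary(toyDocs, minDF = 2) // "dialect" and "people" appear in one document only
val toyVector = countVector(toyDocs.head, toyVocab)
println(toyVocab.mkString(", "))
println(toyVector)
```

Here the vocabulary is (know, just), and the first toy document becomes a vector of size 2 with count 2.0 at index 0 and count 1.0 at index 1.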

To use the LDA algorithm in the MLlib library, we have to convert the DataFrame back into an RDD.

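// Convert DF to RDD

// Note: on Spark 2.x, DataFrame.map no longer returns an RDD; use countVectors.rdd.map there

// and convert each ml vector with org.apache.spark.mllib.linalg.Vectors.fromML

import org.apache.spark.sql.Row // needed for the Row pattern match below

import org.apache.spark.mllib.linalg.Vector

val lda_countVector = countVectors.map { case Row(id: Long, countVector: Vector) => (id, countVector) }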

import org.apache.spark.mllib.linalg.Vector

lda_countVector: org.apache.spark.rdd.RDD[(Long, org.apache.spark.mllib.linalg.Vector)] = MapPartitionsRDD[305] at map at <console>:86

// format: Array(id, (VocabSize, Array(indexedTokens), Array(Token Frequency)))

lda_countVector.take(1)

res25: Array[(Long, org.apache.spark.mllib.linalg.Vector)] = Array((17668,(10000,[0,9,32,33,37,45,56,63,71,79,124,235,293,1562,1660,1899],[1.0,1.0,1.0,2.0,1.0,1.0,1.0,2.0,1.0,1.0,1.0,1.0,1.0,1.0,1.0,1.0])))

Let's get an overview of LDA in Spark's MLlib

See the MLlib Programming Guide linked above.

Create LDA model with Online Variational Bayes

We will now set the parameters for LDA. We will use the OnlineLDAOptimizer() here, which implements Online Variational Bayes.

Choosing the number of topics for your LDA model requires a bit of domain knowledge. As we do not know the number of "topics", we will set numTopics to be 20.

val numTopics = 20

numTopics: Int = 20

We will set the parameters needed to build our LDA model. We can also setMiniBatchFraction for the OnlineLDAOptimizer, which sets the fraction of corpus sampled and used at each iteration. In this example, we will set this to 0.8.

import org.apache.spark.mllib.clustering.{LDA, OnlineLDAOptimizer}

// Set LDA params

val lda = new LDA()

.setOptimizer(new OnlineLDAOptimizer().setMiniBatchFraction(0.8))

.setK(numTopics)

.setMaxIterations(3)

.setDocConcentration(-1) // use default values

.setTopicConcentration(-1) // use default values

import org.apache.spark.mllib.clustering.{LDA, OnlineLDAOptimizer}

lda: org.apache.spark.mllib.clustering.LDA = org.apache.spark.mllib.clustering.LDA@1d493912
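Passing -1 for the two concentration parameters asks MLlib to pick optimizer-specific defaults. As we understand the MLlib LDA API documentation, those defaults can be sketched as below. The helper `defaultConcentrations` is hypothetical and only mirrors the documented rule; check the API docs of your Spark version before relying on the values.

```scala
// Sketch of the documented defaults used when a concentration is set to -1.
// `defaultConcentrations` is a hypothetical helper, not part of the Spark API.
// Returns (docConcentration, topicConcentration) for k topics.
def defaultConcentrations(optimizer: String, k: Int): (Double, Double) = optimizer match {
  case "online" => (1.0 / k, 1.0 / k)             // Online Variational Bayes
  case "em"     => (50.0 / k + 1.0, 0.1 + 1.0)    // Expectation Maximization
  case other    => throw new IllegalArgumentException(s"unknown optimizer: $other")
}

val (alphaOnline, etaOnline) = defaultConcentrations("online", 20)
val (alphaEM, etaEM)         = defaultConcentrations("em", 20)
println(s"online: docConcentration=$alphaOnline topicConcentration=$etaOnline")
println(s"em:     docConcentration=$alphaEM topicConcentration=$etaEM")
```

With numTopics = 20 and the online optimizer, both concentrations come out as 1/20 = 0.05. Values this far below 1 favor documents that mix only a few topics and topics that concentrate on relatively few words.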

Create the LDA model with Online Variational Bayes.

val ldaModel = lda.run(lda_countVector)

ldaModel: org.apache.spark.mllib.clustering.LDAModel = org.apache.spark.mllib.clustering.LocalLDAModel@3945c264

Watch Online Learning for Latent Dirichlet Allocation in NIPS2010 by Matt Hoffman.

Also see the paper on online variational Bayes by Matt Hoffman, linked from the video page, for more details: http://videolectures.net/site/normaldl/tag=83534/nips20101291.pdf

Note that the OnlineLDAOptimizer returns a LocalLDAModel, which stores the inferred topics of your corpus.

Review Topics

We can now review the results of our LDA model. We will print out all 20 topics with their corresponding term probabilities.

Note that you will get slightly different results every time you run an LDA model since LDA includes some randomization.

Let us review the results of the LDA model with Online Variational Bayes, step by step.

val topicIndices = ldaModel.describeTopics(maxTermsPerTopic = 5)

topicIndices: Array[(Array[Int], Array[Double])] = Array((Array(4, 1, 9, 2, 8),Array(0.0015151627605402241, 0.001486317713687992, 0.0011681552786581887, 0.0010054261952954218, 8.615063157995299E-4)), (Array(1, 5, 15, 13, 14),Array(0.0019868644391922855, 0.0012407287861833166, 0.001222938318274118, 8.919429867442377E-4, 8.877996244770699E-4)), (Array(5, 3, 28, 9, 30),Array(0.0021699398068533824, 0.0011749233194760728, 9.013899165935946E-4, 8.504816848342215E-4, 8.252271581728695E-4)), (Array(5, 16, 1, 6, 2),Array(0.0052403657467525845, 0.0042033140402619375, 0.003763558294118634, 0.003396547452689663, 0.003021645305713142)), (Array(3, 4, 0, 1, 9),Array(0.0033139517864565287, 0.0021697727562965366, 0.0021377421844192557, 0.0018117462306041533, 0.0016275001664372737)), (Array(0, 1, 2, 22, 4),Array(0.0023854706148061567, 0.0019261905458742943, 0.0016576667581656617, 0.0011917968671869056, 0.0011248880469085086)), (Array(0, 4, 2, 1, 11),Array(0.004655511405730442, 0.002458768397723486, 0.0021078794201011583, 0.0017387793236628348, 0.0014236215935311085)), (Array(0, 1, 3, 6, 8),Array(0.0018921794022548181, 0.0018483719649855822, 0.0014386272597831232, 0.0014182374956709625, 0.0013764615715091382)), (Array(2, 10, 7, 12, 61),Array(0.0051946490753460636, 0.00495793071050275, 0.003580668739640197, 0.003184743897654473, 0.002981524063809674)), (Array(0, 2, 9, 4, 26),Array(0.0021335471974973886, 9.625379885382163E-4, 9.199660101772105E-4, 9.033959582006918E-4, 8.747265482123576E-4)), (Array(42, 121, 1, 4, 0),Array(0.0025288929149109313, 0.002359902253314637, 0.002261894922516035, 0.0018626814528567444, 0.0017576647100866413)), (Array(4, 1, 6, 0, 7),Array(0.007977613731363398, 0.0074256547675291915, 0.006054432245366723, 0.0047629498600014275, 0.004741787642644756)), (Array(0, 11, 1, 2, 7),Array(0.003854363383063756, 0.0024260532260151663, 0.0022162035474150243, 0.0018817267913487192, 0.0015001333324918243)), (Array(6, 1, 2, 70, 13),Array(0.0015776417631711249, 
0.0014414685828715156, 0.0014279021959578673, 0.0012813104112466842, 8.294352348310147E-4)), (Array(1, 2, 0, 5, 23),Array(0.0036920461187951023, 0.00359354972907098, 0.002507896270867163, 0.0021850418950099, 0.0016447369107874818)), (Array(6, 0, 3, 35, 2),Array(0.002464771356929917, 0.0021840298145414266, 0.0013576445527878143, 0.0011473959400518262, 0.0011075469842802296)), (Array(4, 1, 10, 0, 5),Array(0.0019877776653926984, 0.0019088812832900985, 0.0014118885885063602, 0.0011336973397614043, 9.384609226085285E-4)), (Array(0, 2, 5, 4, 3),Array(0.003453703972841875, 0.00327608562414095, 0.0025174800837702693, 0.002218982477404188, 0.0017805786184620824)), (Array(0, 2, 1, 3, 6),Array(0.018645078429696073, 0.01154979812954807, 0.0093996276023053, 0.008649180109527853, 0.008565200297515204)), (Array(0, 1, 2, 5, 8),Array(0.019137707865734686, 0.014515895598928779, 0.012282554660302827, 0.010060286236470243, 0.009978216413893133)))

val vocabList = vectorizer.vocabulary

vocabList: Array[String] = Array(know, just, like, want, think, right, going, good, yeah, tell, come, time, look, didn, mean, make, okay, really, little, sure, gonna, thing, people, said, maybe, need, sorry, love, talk, thought, doing, life, night, things, work, money, better, told, long, help, believe, years, shit, does, away, place, hell, doesn, great, home, feel, fuck, kind, remember, dead, course, wouldn, wait, kill, guess, understand, thank, girl, wrong, leave, listen, talking, real, hear, stop, nice, happened, fine, wanted, father, gotta, mind, fucking, house, wasn, getting, world, stay, mother, left, came, care, thanks, knew, room, trying, guys, went, looking, coming, heard, friend, haven, seen, best, tonight, live, used, matter, killed, pretty, business, idea, couldn, head, miss, says, wife, called, woman, morning, tomorrow, start, stuff, saying, play, hello, baby, hard, probably, minute, days, took, somebody, today, school, meet, gone, crazy, wants, damn, forget, cause, problem, deal, case, friends, point, hope, jesus, afraid, looks, knows, year, worry, exactly, aren, half, thinking, shut, hold, wanna, face, minutes, bring, doctor, word, read, everybody, supposed, makes, story, turn, true, watch, thousand, family, brother, kids, week, happen, fuckin, working, open, happy, lost, john, hurt, town, ready, alright, late, actually, gave, married, beautiful, soon, jack, times, sleep, door, having, hand, drink, easy, gets, chance, young, trouble, different, anybody, rest, shot, hate, death, second, later, asked, phone, wish, check, quite, walk, change, police, couple, question, close, taking, heart, hours, making, comes, anymore, truth, trust, dollars, important, captain, telling, funny, person, honey, goes, eyes, reason, inside, stand, break, means, number, tried, high, white, water, suppose, body, sick, game, excuse, party, women, country, answer, waiting, christ, office, send, pick, alive, sort, blood, black, daddy, line, husband, goddamn, book, fifty, thirty, 
fact, million, died, hands, power, started, stupid, shouldn, months, boys, city, dinner, sense, running, hour, shoot, fight, drive, george, speak, figure, living, ship, dear, street, ahead, lady, seven, free, feeling, scared, frank, able, children, safe, moment, outside, news, president, brought, write, happens, sent, bullshit, lose, light, glad, girls, child, sister, sounds, promise, till, lives, sound, weren, save, cool, poor, shall, asking, plan, bitch, king, daughter, beat, weeks, york, cold, worth, taken, harry, needs, piece, movie, fast, possible, small, goin, straight, human, hair, company, tired, food, lucky, pull, wonderful, touch, looked, state, thinks, picture, words, leaving, control, clear, known, special, buddy, luck, order, follow, expect, mary, mouth, catch, worked, mister, learn, playing, perfect, dream, calling, questions, hospital, coffee, ride, takes, parents, miles, works, secret, hotel, explain, worse, kidding, past, outta, general, unless, felt, drop, throw, interested, hang, certainly, absolutely, earth, loved, wonder, dark, accident, seeing, clock, doin, simple, turned, date, sweet, meeting, clean, sign, feet, handle, music, report, giving, army, fucked, cops, charlie, information, smart, yesterday, fall, fault, class, bank, month, blow, major, caught, swear, paul, road, choice, talked, plane, boss, david, paid, wear, american, worried, lord, goodbye, clothes, paper, ones, terrible, strange, mistake, given, kept, hurry, blue, murder, finish, apartment, sell, middle, nothin, careful, hasn, meant, walter, moving, changed, imagine, fair, difference, quiet, happening, near, quit, marry, personal, figured, future, rose, agent, building, michael, kinda, mama, early, private, watching, trip, certain, record, busy, jimmy, broke, store, sake, longer, finally, stick, boat, born, sitting, evening, bucks, ought, lying, chief, history, honor, lunch, kiss, darling, respect, uncle, favor, fool, rich, killing, land, liked, peter, tough, brain, interesting, 
nick, completely, welcome, problems, radio, wake, dick, honest, cash, dance, dude, james, bout, floor, weird, court, calls, jail, window, involved, drunk, johnny, needed, officer, asshole, books, spend, situation, relax, pain, service, dangerous, grand, security, letter, stopped, realize, offer, table, bastard, message, killer, instead, jake, deep, nervous, pass, somethin, evil, english, bought, short, step, ring, machine, likes, picked, eddie, voice, carry, upset, lived, forgot, afternoon, fear, quick, finished, count, forgive, wrote, decided, totally, named, space, team, lawyer, pleasure, doubt, station, suit, gotten, bother, return, prove, pictures, slow, strong, bunch, list, wearing, driving, join, christmas, tape, force, church, attack, appreciate, hungry, standing, college, present, dying, prison, missing, charge, board, truck, public, gold, staying, calm, ball, hardly, hadn, missed, lead, government, island, cover, horse, reach, joke, french, fish, star, surprise, moved, mike, america, soul, self, seconds, movies, dress, club, putting, price, saved, listening, lots, cost, smell, mark, peace, gives, crime, entire, dreams, single, usually, department, holy, beer, west, stuck, wall, protect, nose, teach, ways, forever, awful, grow, train, type, billy, rock, detective, walking, dumb, planet, papers, beginning, folks, attention, park, card, hide, birthday, test, share, reading, starting, master, lieutenant, partner, field, enjoy, twice, mess, film, blame, bomb, dollar, loves, round, south, girlfriend, gentlemen, records, using, plenty, especially, evidence, silly, experience, admit, normal, fired, talkin, notice, mission, fighting, memory, lock, louis, wedding, crap, promised, guns, idiot, marriage, glass, orders, ground, impossible, heaven, knock, hole, spent, green, animal, neck, wondering, press, drugs, nuts, position, broken, names, jerry, asleep, acting, visit, feels, plans, boyfriend, wind, tells, paris, smoke, cross, gimme, holding, sheriff, walked, 
mention, brothers, double, judge, writing, code, pardon, keeps, fellow, closed, lovely, angry, fell, surprised, percent, cute, charles, bathroom, correct, agree, address, summer, ridiculous, andy, tommy, rules, account, note, group, learned, proud, laugh, sing, pulled, sleeping, colonel, upstairs, area, difficult, built, jump, river, betty, breakfast, bobby, bridge, dirty, locked, amazing, north, feelings, alex, plus, definitely, worst, accept, kick, file, gettin, wild, seriously, grace, steal, stories, advice, relationship, nature, places, contact, waste, spot, favorite, beach, stole, apart, knowing, faith, song, risk, level, loose, foot, played, eating, patient, action, washington, witness, turns, build, obviously, begin, split, crew, command, games, nurse, decide, keeping, tight, copy, runs, form, bird, complete, insane, arrest, scene, taste, jeffrey, consider, shoes, teeth, sooner, career, henry, devil, monster, weekend, heavy, gift, innocent, hall, showed, study, destroy, greatest, keys, track, comin, raise, danger, bruce, suddenly, hanging, carl, california, apologize, concerned, program, blind, seventy, chicken, sweetheart, medical, forward, drinking, willing, legs, suspect, shop, professor, admiral, guard, data, ticket, camp, tree, losing, goodnight, dunno, paying, murdered, burn, television, trick, possibly, senator, credit, extra, dropped, meaning, starts, warm, hiding, sold, stone, cheap, taught, marty, lately, simply, lookin, science, queen, following, majesty, jeff, corner, harold, duty, cars, training, heads, seat, discuss, noticed, helped, enemy, bear, common, screw, responsible)
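The term indices returned by describeTopics point into vocabList. As a quick check of how the two outputs above fit together, here is a plain-Scala sketch that decodes the first topic by hand. The names `vocabHead`, `firstTopicTerms`, `firstTopicWeights` and `firstTopic` are introduced only for this illustration; their values are copied from the outputs above.

```scala
// First ten vocabulary entries, copied from the vocabList output above.
val vocabHead = Array("know", "just", "like", "want", "think", "right", "going", "good", "yeah", "tell")

// First topic, copied from the topicIndices output above.
val firstTopicTerms   = Array(4, 1, 9, 2, 8)
val firstTopicWeights = Array(0.0015151627605402241, 0.001486317713687992,
  0.0011681552786581887, 0.0010054261952954218, 8.615063157995299E-4)

// Look up each term index in the vocabulary and pair it with its weight.
val firstTopic = firstTopicTerms.map(vocabHead(_)).zip(firstTopicWeights)
firstTopic.foreach { case (term, weight) => println(f"$term%-6s $weight%.6f") }
```

The first topic decodes to think, just, tell, like and yeah, with weights in descending order.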

// Replace each term index with its vocabulary word and pair it with its weight

val topics = topicIndices.map { case (terms, termWeights) =>

  terms.map(vocabList(_)).zip(termWeights)

}

topics: Array[Array[(String, Double)]] = Array(Array((think,0.0015151627605402241), (just,0.001486317713687992), (tell,0.0011681552786581887), (like,0.0010054261952954218), (yeah,8.615063157995299E-4)), Array((just,0.0019868644391922855), (right,0.0012407287861833166), (make,0.001222938318274118), (didn,8.919429867442377E-4), (mean,8.877996244770699E-4)), Array((right,0.0021699398068533824), (want,0.0011749233194760728), (talk,9.013899165935946E-4), (tell,8.504816848342215E-4), (doing,8.252271581728695E-4)), Array((right,0.0052403657467525845), (okay,0.0042033140402619375), (just,0.003763558294118634), (going,0.003396547452689663), (like,0.003021645305713142)), Array((want,0.0033139517864565287), (think,0.0021697727562965366), (know,0.0021377421844192557), (just,0.0018117462306041533), (tell,0.0016275001664372737)), Array((know,0.0023854706148061567), (just,0.0019261905458742943), (like,0.0016576667581656617), (people,0.0011917968671869056), (think,0.0011248880469085086)), Array((know,0.004655511405730442), (think,0.002458768397723486), (like,0.0021078794201011583), (just,0.0017387793236628348), (time,0.0014236215935311085)), Array((know,0.0018921794022548181), (just,0.0018483719649855822), (want,0.0014386272597831232), (going,0.0014182374956709625), (yeah,0.0013764615715091382)), Array((like,0.0051946490753460636), (come,0.00495793071050275), (good,0.003580668739640197), (look,0.003184743897654473), (thank,0.002981524063809674)), Array((know,0.0021335471974973886), (like,9.625379885382163E-4), (tell,9.199660101772105E-4), (think,9.033959582006918E-4), (sorry,8.747265482123576E-4)), Array((shit,0.0025288929149109313), (hello,0.002359902253314637), (just,0.002261894922516035), (think,0.0018626814528567444), (know,0.0017576647100866413)), Array((think,0.007977613731363398), (just,0.0074256547675291915), (going,0.006054432245366723), (know,0.0047629498600014275), (good,0.004741787642644756)), Array((know,0.003854363383063756), (time,0.0024260532260151663), 
(just,0.0022162035474150243), (like,0.0018817267913487192), (good,0.0015001333324918243)), Array((going,0.0015776417631711249), (just,0.0014414685828715156), (like,0.0014279021959578673), (nice,0.0012813104112466842), (didn,8.294352348310147E-4)), Array((just,0.0036920461187951023), (like,0.00359354972907098), (know,0.002507896270867163), (right,0.0021850418950099), (said,0.0016447369107874818)), Array((going,0.002464771356929917), (know,0.0021840298145414266), (want,0.0013576445527878143), (money,0.0011473959400518262), (like,0.0011075469842802296)), Array((think,0.0019877776653926984), (just,0.0019088812832900985), (come,0.0014118885885063602), (know,0.0011336973397614043), (right,9.384609226085285E-4)), Array((know,0.003453703972841875), (like,0.00327608562414095), (right,0.0025174800837702693), (think,0.002218982477404188), (want,0.0017805786184620824)), Array((know,0.018645078429696073), (like,0.01154979812954807), (just,0.0093996276023053), (want,0.008649180109527853), (going,0.008565200297515204)), Array((know,0.019137707865734686), (just,0.014515895598928779), (like,0.012282554660302827), (right,0.010060286236470243), (yeah,0.009978216413893133)))
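
Many of the same very common words (know, just, like, right) show up in several topics in the output above. As a rough way to see this, here is a minimal sketch in plain Scala (no Spark needed) that counts in how many topics each term occurs. It uses only the terms of the first three topics, copied from the output above; the names `topicTerms`, `topicCountPerTerm` and `sharedTerms` are made up for this illustration and are not part of the notebook.

```scala
// Terms of the first three topics, copied from the output above (weights omitted).
val topicTerms: Seq[Seq[String]] = Seq(
  Seq("think", "just", "tell", "like", "yeah"),
  Seq("just", "right", "make", "didn", "mean"),
  Seq("right", "want", "talk", "tell", "doing")
)

// Count in how many topics each term occurs (a term counts once per topic).
val topicCountPerTerm: Map[String, Int] =
  topicTerms.flatMap(_.distinct).groupBy(identity).map { case (t, occ) => t -> occ.size }

// Keep the terms shared by more than one topic, most widespread first,
// breaking ties alphabetically.
val sharedTerms: Seq[(String, Int)] =
  topicCountPerTerm.toSeq.filter(_._2 > 1).sortBy { case (t, n) => (-n, t) }

sharedTerms.foreach { case (t, n) => println(s"$t appears in $n topics") }
```

With the real data, the same idea could be applied to `topics.map(_.map(_._1).toSeq).toSeq` in place of the hand-copied `topicTerms`.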

Feel free to take things apart to understand!

topicIndices(0)

res26: (Array[Int], Array[Double]) = (Array(4, 1, 9, 2, 8),Array(0.0015151627605402241, 0.001486317713687992, 0.0011681552786581887, 0.0010054261952954218, 8.615063157995299E-4))

topicIndices(0)._1

res27: Array[Int] = Array(4, 1, 9, 2, 8)

topicIndices(0)._1(0)

res28: Int = 4

vocabList(topicIndices(0)._1(0))

res29: String = think
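
The four cells above can be put back together in one go. The following is a minimal sketch in plain Scala (no Spark needed) that mimics the shapes involved. `vocabSketch` is a hypothetical stand-in for `vectorizer.vocabulary`: only the words at indices 1, 2, 4, 8 and 9 are taken from the output above, and the other entries are placeholders. `topicSketch` is a stand-in for `topicIndices(0)`, copied from `res26`.

```scala
// Hypothetical stand-in for vectorizer.vocabulary.
// Only indices 1, 2, 4, 8 and 9 come from the notebook output; "w0", "w3", ... are placeholders.
val vocabSketch: Array[String] =
  Array("w0", "just", "like", "w3", "think", "w5", "w6", "w7", "yeah", "tell")

// Stand-in for topicIndices(0): (term indices, term weights), copied from res26.
val topicSketch: (Array[Int], Array[Double]) = (
  Array(4, 1, 9, 2, 8),
  Array(0.0015151627605402241, 0.001486317713687992, 0.0011681552786581887,
        0.0010054261952954218, 8.615063157995299E-4)
)

// Take the tuple apart, look up each index in the vocabulary,
// and pair every word with its weight.
val (termIdx, termWeights) = topicSketch
val labelled: Array[(String, Double)] = termIdx.map(vocabSketch(_)).zip(termWeights)

labelled.foreach { case (term, weight) => println(f"$term%-6s $weight%.6f") }
```

This is the same `map` and `zip` that the `topics` cell applies to every topic, shown here for a single one.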

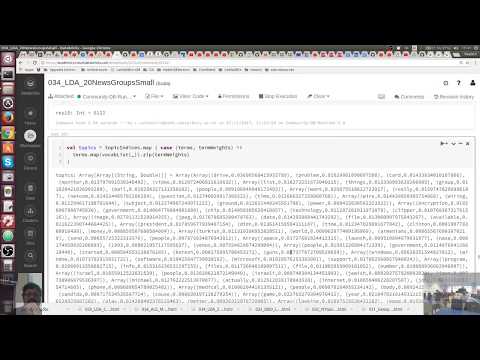

Review the results of the LDA model with Online Variational Bayes, doing all four of the earlier steps at once.

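// Top 5 terms per topic, as (term indices, term weights)

val topicIndices = ldaModel.describeTopics(maxTermsPerTopic = 5)

// Vocabulary used to turn term indices back into words

val vocabList = vectorizer.vocabulary

// Replace each term index with its word and pair it with its weight

val topics = topicIndices.map { case (terms, termWeights) =>

  terms.map(vocabList(_)).zip(termWeights)

}

println(s"$numTopics topics:")

// Print each topic with its terms and weights, one term per line

topics.zipWithIndex.foreach { case (topic, i) =>

  println(s"TOPIC $i")

  topic.foreach { case (term, weight) => println(s"$term\t$weight") }

  println("==========")

}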